The software development life cycle (SDLC) has gone through many iterations, each shaped by its own ideas and advances. Today, DevOps is the predominant approach driving modern software development.

Nevertheless, seamlessly integrating security into DevOps remains a significant challenge. In many organizations, DevOps and security teams still work separately, and security is handled as a manual step outside development and operations. This disrupts the automation at the heart of the DevOps cycle.

DevSecOps extends the DevOps philosophy. It embeds security objectives (integrity, availability, confidentiality, accountability, and assurance) into the overall structure.

Generally speaking, DevSecOps is an approach to building products that includes security measures from the early development stages. Its essence is not to deal with the consequences of weaknesses that are already in the product, but to prevent them from appearing in the first place.

In this article, we explain why DevSecOps is needed at all. You will learn what difficulties you may encounter when implementing it in a company and how to overcome them. We will also share tips from Codica's experience on how to implement this practice in your company.

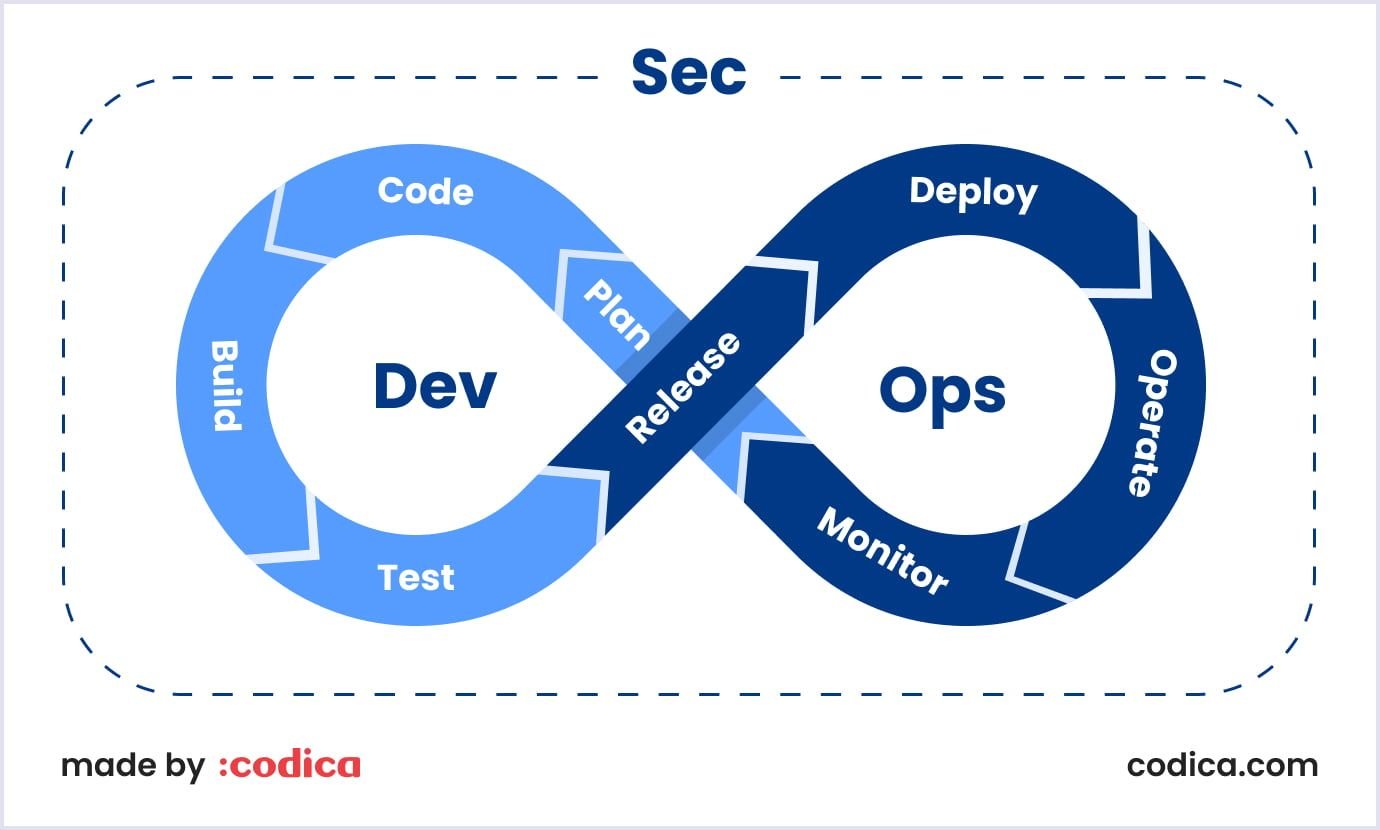

The definition of DevOps security: DevSecOps

The term “DevSecOps” includes three components: development, security, and operations.

- Development: It is the process of planning, coding, building, and testing an application.

- Security: It means building security into earlier stages of the software development cycle. For example, programmers make sure their code does not contain security vulnerabilities, and security specialists test the software before a company releases it.

- Operations: The operations team releases, monitors, and fixes any issues that occur in the software.

The purpose of DevSecOps is to help development teams address security issues effectively. This involves cooperation among development, operations, and security teams. As a result, security becomes an integral part of the SDLC, from the initial design and development stages to testing, deployment, and maintenance.

DevOps security emphasizes the importance of building secure software by implementing security measures and best practices early on in the software creation process. This approach helps organizations find and address security issues more effectively. Thus, the risk of vulnerabilities and security breaches is much lower.

Also, DevOps security involves the use of automation and continuous monitoring tools to detect and respond to security threats in real time. These tools allow security teams to identify and address vulnerabilities and security issues quickly and efficiently.

Overall, DevOps security is an essential component of a modern software development and delivery strategy. It strengthens security while preserving the agility and speed that are crucial for success in today's fast-paced digital environment.

DevOps security challenges

Implementing DevOps without a proper security focus can lead to problems such as security breaches. Uber's 2016 breach illustrates this issue: attackers gained access to the company's private GitHub repository, found login credentials there, and used them to obtain sensitive rider and driver data from Uber's AWS environment.

In Uber's case, an essential step would have been a comprehensive security scan of the code to detect and remove any embedded credentials.

A simple secret scanning tool could have prevented all of it.
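
To illustrate the idea, here is a minimal sketch of a secret scanner in Python. It assumes the only secret we look for is a long-term AWS access key ID, which starts with `AKIA` followed by 16 uppercase letters or digits. The names `find_aws_key_ids` and `scan_files` are hypothetical. Dedicated tools such as gitleaks or TruffleHog cover many more secret types and are the better choice in practice.

```python
import re
from typing import Iterator

# Long-term AWS access key IDs: "AKIA" + 16 uppercase letters/digits (20 chars).
# One illustrative pattern only; real scanners use many patterns and entropy checks.
AWS_ACCESS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_aws_key_ids(text: str) -> Iterator[tuple[int, str]]:
    """Yield (line_number, key_id) for every suspected AWS access key ID in text."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in AWS_ACCESS_KEY_ID.finditer(line):
            yield lineno, match.group(1)


def scan_files(paths: list[str]) -> bool:
    """Print findings for each file and return True if any secret was found."""
    found = False
    for path in paths:
        with open(path, encoding="utf-8", errors="ignore") as handle:
            for lineno, key_id in find_aws_key_ids(handle.read()):
                # Print only a prefix so the scanner's own output does not leak the key.
                print(f"{path}:{lineno}: possible AWS access key ID {key_id[:4]}...")
                found = True
    return found
```

In a CI job, a `True` result from `scan_files` would fail the build before the code reaches the repository's main branch.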

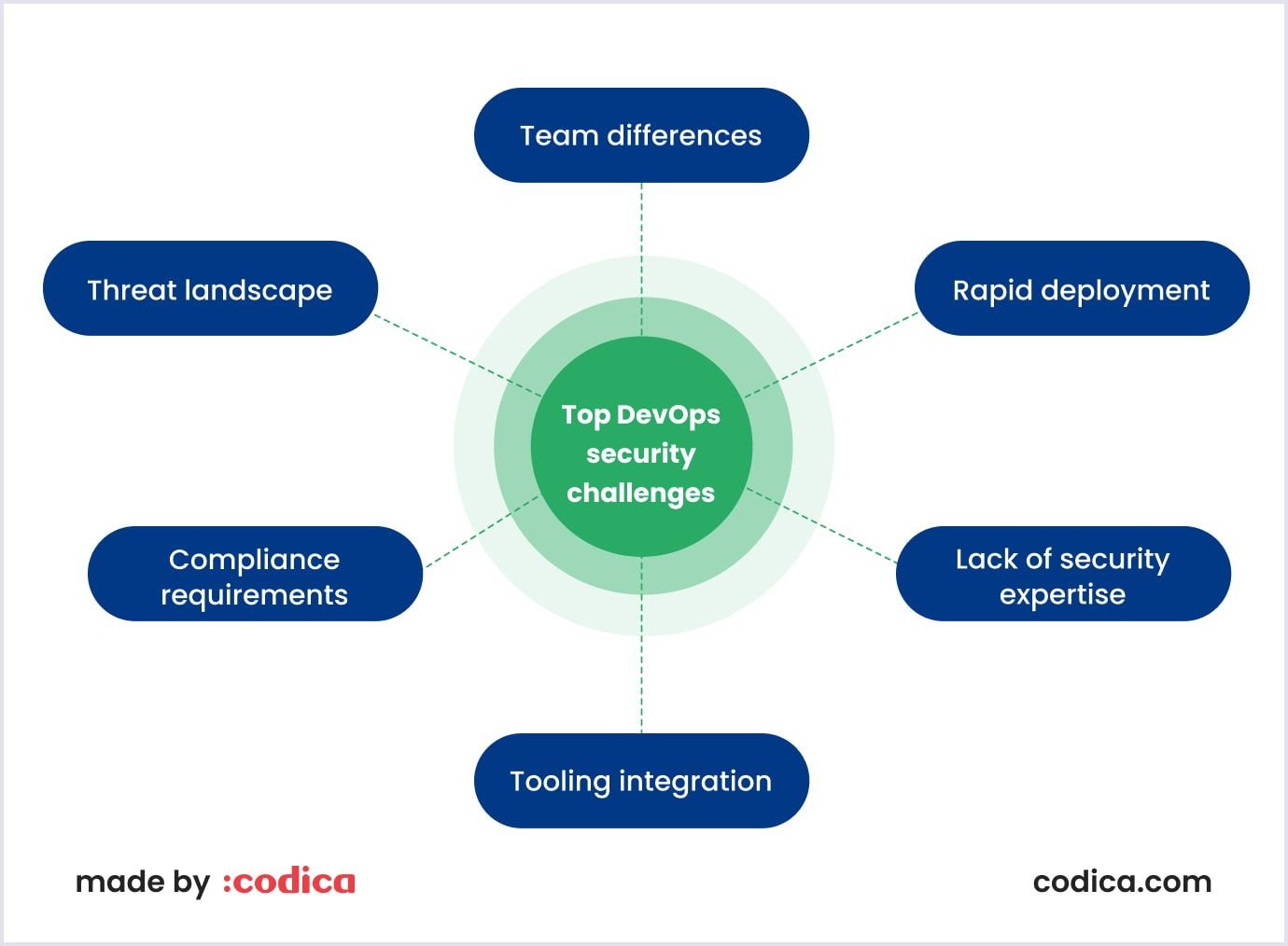

DevSecOps helps ensure that the best possible security measures are introduced throughout the app creation cycle. Nonetheless, organizations that incorporate security into their DevOps processes face several challenges.

In the diagram below, you can see the most common issues when implementing DevSecOps.

Let’s look at these issues in more detail.

Team differences

DevOps security requires close collaboration between traditionally siloed teams such as development, operations, and security. This can be challenging due to differences in goals, processes, and priorities.

Set up effective communication channels so that every team understands its roles and responsibilities within the DevSecOps process. Establish clear expectations and accountabilities for each team. This promotes transparency and prevents overlapping responsibilities.

Rapid deployment

DevOps involves frequent code changes and continuous deployment. This pace can make it difficult to maintain security controls and ensure that security is not compromised along the way.

So, you should embed security practices into every phase of the software creation process. Integrate security testing and code reviews, and build vulnerability scanning into the CI/CD (continuous integration/continuous delivery) pipeline to catch security issues early on. Encourage developers to take ownership of security by providing them with the necessary training and resources.
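
A pipeline security gate can be as simple as a script that turns scanner findings into a pass/fail exit code. The sketch below assumes the findings have already been parsed into a hypothetical `Finding` record with a severity label. Mapping a real scanner's report format onto it is left out. Some scanners offer this behavior directly. For example, Trivy's `--exit-code` and `--severity` options make the scan itself fail the job.

```python
from dataclasses import dataclass

# Severity order used by this sketch (lowest to highest).
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


@dataclass(frozen=True)
class Finding:
    rule_id: str   # hypothetical identifier taken from the scanner report
    severity: str  # one of the SEVERITY_RANK keys, in any letter case


def security_gate(findings: list[Finding], fail_at: str = "HIGH") -> int:
    """Return an exit code for CI: 1 if any finding meets the threshold, else 0."""
    threshold = SEVERITY_RANK[fail_at.upper()]
    blocking = [
        f for f in findings
        if SEVERITY_RANK.get(f.severity.upper(), 0) >= threshold
    ]
    for f in blocking:
        print(f"blocking finding: {f.rule_id} ({f.severity.upper()})")
    return 1 if blocking else 0
```

A CI step would call `sys.exit(security_gate(findings))`. The job then fails on high-severity issues, while lower-severity ones do not block the release.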

Lack of security expertise

Many DevOps teams lack security expertise, which makes it hard to discover and address security vulnerabilities and threats. What's the solution?

You can invest in training and upskilling your DevOps teams in security practices and principles. Offer DevSecOps awareness programs, workshops, and certifications to bridge the expertise gap. Consider partnering with external security experts or consultants for guidance and support when needed.

Tooling integration

DevOps security requires the integration of security tools into the DevOps pipeline, which can be challenging due to compatibility issues, configuration complexities, and maintenance requirements.

Select and integrate security tools that align with your DevOps processes and are compatible with your technology stack. Evaluate tools that offer seamless integration, easy configuration, and maintenance. Leverage containerization and infrastructure-as-code practices to ensure consistent tooling across different environments.

Compliance requirements

DevOps security must comply with various regulatory requirements, including data privacy regulations such as the GDPR (General Data Protection Regulation) and the CCPA (California Consumer Privacy Act). Maintaining compliance with these regulations can be challenging.

So, you can implement automation and version control for compliance requirements. Use infrastructure-as-code to define and maintain compliant configurations. Regularly audit and test your systems against compliance standards. Also, leverage security frameworks and established guidelines to streamline compliance efforts.
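
As an illustration of compliance as code, the sketch below expresses a few controls as Python predicates and audits a list of resource descriptions against them. The resource fields (`name`, `encrypted`, `logging`, `tags`) and the control IDs are hypothetical. In practice, the resources would come from your IaC definitions or cloud inventory, and the controls from the framework you must follow.

```python
from typing import Callable

# Each control pairs an ID with a predicate every resource must satisfy.
# Both the IDs and the resource fields are hypothetical examples.
CONTROLS: list[tuple[str, Callable[[dict], bool]]] = [
    ("encryption-at-rest", lambda r: r.get("encrypted") is True),
    ("access-logging", lambda r: r.get("logging") is True),
    ("data-owner-tag", lambda r: "owner" in r.get("tags", {})),
]


def audit(resources: list[dict]) -> list[str]:
    """Return one 'resource: control' entry for every failed control."""
    violations = []
    for resource in resources:
        for control_id, check in CONTROLS:
            if not check(resource):
                violations.append(f"{resource['name']}: {control_id}")
    return violations
```

The controls live in version control next to the infrastructure code. Every change to them is therefore reviewed and auditable, and the audit can run on every commit.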

Threat landscape

The landscape of threats is constantly evolving. New threats and vulnerabilities arise every day. Keeping pace with the newest security trends and developments can be a challenge for DevOps teams.

Stay updated on the evolving threat landscape by monitoring security news. For example, you can subscribe to relevant mailing lists and participate in industry forums. Leverage threat intelligence services to identify and prioritize potential risks. Encourage collaboration and information sharing with other companies to learn from their experiences and useful practices.

To address these challenges, organizations can establish clear security policies and procedures, implement a robust security testing framework, and train DevOps teams in security best practices. They can also leverage automated DevOps security tools to detect and respond to threats in real time. Additionally, engaging third-party security experts can help organizations close security gaps and improve their overall security posture.

Key recommendations to enhance DevOps security

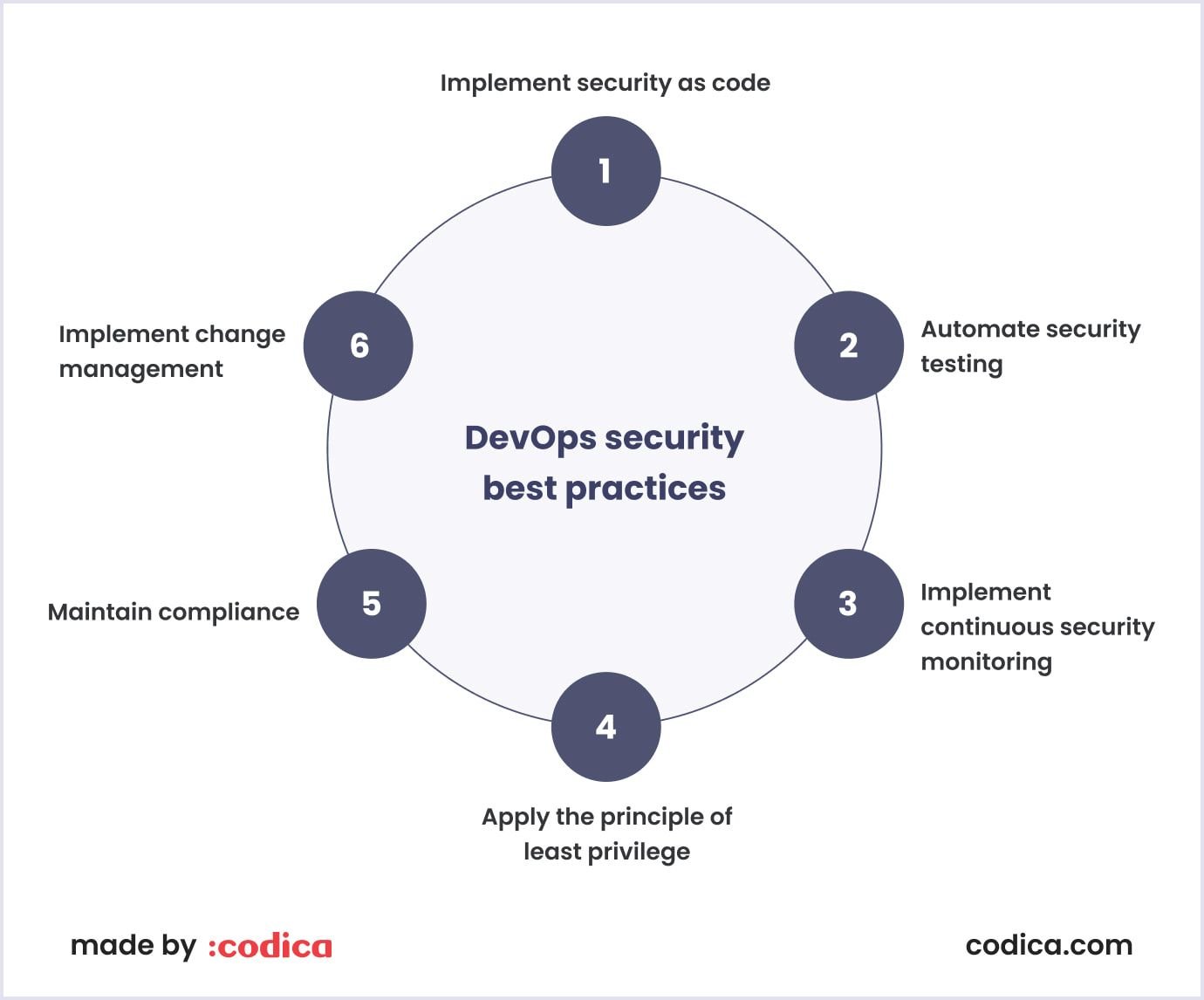

DevOps security is a set of practices aimed at integrating security into the DevOps process. Below are some DevOps security best practices:

Implement security as code

Implementing a comprehensive DevSecOps model is crucial to embedding security within DevOps pipelines. For this, you need to foster a culture wherein everyone shares responsibility for security.

Security teams should be able to write code and work with application programming interfaces (APIs), while developers should be empowered to automate security-related tasks. So, embed security controls and policies into your code, infrastructure, and deployment processes, for example with Terraform configurations or CloudFormation templates.
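
To make "security as code" more concrete, here is a sketch of a custom policy check that runs against a Terraform plan exported as JSON (`terraform show -json tfplan > plan.json`). It flags planned `aws_security_group` resources whose inline ingress rules open SSH (port 22) to `0.0.0.0/0`. It is deliberately simplified: it ignores IPv6 ranges and standalone rule resources. Tools such as tfsec and Checkov already ship checks like this one.

```python
import json


def load_plan(path: str) -> dict:
    """Load a plan produced by `terraform show -json tfplan`."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def open_ssh_findings(plan: dict) -> list[str]:
    """Return addresses of planned security groups that allow SSH from anywhere."""
    findings = []
    for change in plan.get("resource_changes", []):
        if change.get("type") != "aws_security_group":
            continue
        after = (change.get("change") or {}).get("after") or {}  # None when deleting
        for rule in after.get("ingress") or []:
            all_traffic = rule.get("protocol") == "-1"  # "-1" means all protocols and ports
            covers_ssh = all_traffic or rule.get("from_port", 0) <= 22 <= rule.get("to_port", 0)
            if covers_ssh and "0.0.0.0/0" in (rule.get("cidr_blocks") or []):
                findings.append(change["address"])
                break  # one finding per security group is enough
    return findings
```

In a pipeline, a non-empty result would fail the build before `terraform apply` runs.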

Automate security testing

Use automated security testing tools, such as static analysis tools, dynamic analysis tools, and vulnerability scanners, to detect vulnerabilities in code and infrastructure early in the development process.

Automating security testing makes identifying vulnerabilities faster and more consistent. Automated tools can scan network environments quickly and thoroughly, which accelerates testing and reduces the overall time and resources it requires.
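
Static analysis tools examine code without running it. A toy version for Python source, built only on the standard `ast` module, might look like the sketch below. It flags calls to `eval()` or `exec()` and any call made with `shell=True`. The rule set is an assumption chosen for illustration. Real SAST tools such as Bandit or SonarQube apply hundreds of rules and deeper analysis.

```python
import ast

# Built-in calls this toy rule set treats as dangerous.
DANGEROUS_CALLS = {"eval", "exec"}


def scan_source(source: str, filename: str = "<string>") -> list[str]:
    """Return 'file:line: message' findings for risky calls, sorted by line."""
    findings: list[tuple[int, str]] = []
    for node in ast.walk(ast.parse(source, filename=filename)):
        if not isinstance(node, ast.Call):
            continue
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # shell=True (e.g. in subprocess.run) can enable shell injection.
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append((node.lineno, "call with shell=True"))
    return [f"{filename}:{line}: {message}" for line, message in sorted(findings)]
```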

Implement continuous security monitoring

Clearly define the goals of your continuous security monitoring program. Deploy automated security monitoring tools to detect and respond to security incidents in real time. Examples include intrusion detection systems, log analysis tools, and SIEM (security information and event management) platforms.

You need to define the critical data sources to monitor. These can include logs from servers, apps, network devices, cloud platforms, and security tools.
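
As a small example of automated log analysis, the sketch below flags IP addresses with repeated failed logins inside a sliding time window, a classic brute-force signal. The log line format, the function name, and the default threshold (5 failures per minute) are assumptions for illustration. A SIEM or IDS would apply rules like this across many data sources.

```python
import re
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Iterable

# Hypothetical log format: "<ISO-8601 timestamp> FAILED_LOGIN ip=<address> user=<name>"
FAILED_LOGIN = re.compile(r"^(\S+) FAILED_LOGIN ip=(\S+)")


def detect_bruteforce(lines: Iterable[str], threshold: int = 5,
                      window: timedelta = timedelta(minutes=1)) -> set[str]:
    """Return IPs with at least `threshold` failed logins within any `window`.

    Assumes each IP's lines arrive in chronological order.
    """
    recent: dict[str, deque] = defaultdict(deque)
    flagged: set[str] = set()
    for line in lines:
        match = FAILED_LOGIN.match(line)
        if not match:
            continue  # ignore lines that are not failed logins
        timestamp = datetime.fromisoformat(match.group(1))
        ip = match.group(2)
        attempts = recent[ip]
        attempts.append(timestamp)
        # Drop attempts that have fallen out of the sliding window.
        while attempts and timestamp - attempts[0] > window:
            attempts.popleft()
        if len(attempts) >= threshold:
            flagged.add(ip)
    return flagged
```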

Apply the principle of least privilege

This principle means limiting access to production environments and data to only those who need it to do their jobs.

This practice significantly reduces the potential entry points for malicious actors. If a user or system is compromised, the damage an attacker can do is limited to that account's permissions, which helps protect the rest of the system or network.
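
One way to keep least privilege from eroding is to lint policies before they are applied. The sketch below reviews an AWS IAM-style policy document and reports `Allow` statements that grant wildcard actions (`*` or `service:*`) or wildcard resources. It is a simplified, hypothetical helper that ignores `NotAction`, `NotResource`, and conditions. AWS IAM Access Analyzer offers much more thorough policy validation.

```python
def wildcard_grants(policy: dict) -> list[str]:
    """List Allow statements in an IAM-style policy that use wildcard actions or resources."""
    problems = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a single string or a list of strings.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        if any(a == "*" or a.endswith(":*") for a in actions):
            problems.append(f"statement {index}: wildcard action")
        if "*" in resources:
            problems.append(f"statement {index}: wildcard resource")
    return problems
```

A narrowly scoped statement such as `s3:GetObject` on a single bucket passes. A statement like `"Action": "s3:*", "Resource": "*"` is reported twice.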

Maintain compliance

Maintaining compliance in DevSecOps is vital to align security practices with regulatory requirements and industry standards.

You should ensure that your DevOps processes and security controls comply with relevant regulations, such as PCI DSS or HIPAA. Stay up to date with the newest security threats and best practices, and regularly review and update your security procedures and policies.

Implement change management

You need to define change management processes that outline how changes are managed, implemented, and validated. Follow these guidelines to test and approve modifications before they reach production.

It is essential to perform thorough impact assessments for proposed changes to evaluate their influence on security, compliance, operations, and other aspects of the system. Continuously monitor changes to verify that they have the intended effect and do not introduce new vulnerabilities.

By implementing these best practices, companies can integrate security into their DevOps processes and reduce the risk of security incidents and data leaks.

DevOps security tools

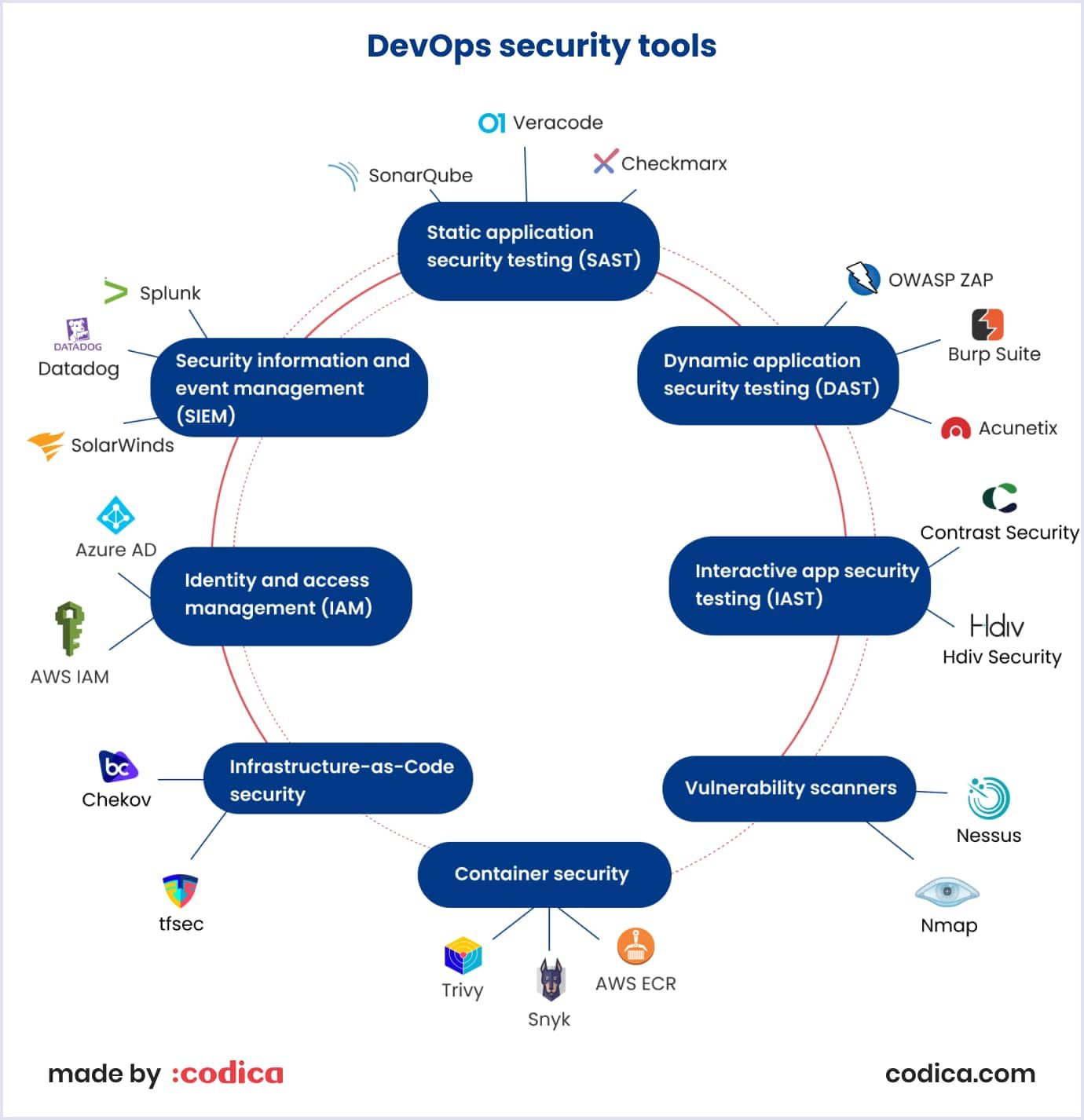

DevOps security involves using various tools to integrate security into the DevOps pipeline. Here are some common DevOps security tools:

Static application security testing (SAST) tools: These tools analyze source code for security vulnerabilities during development. Examples include SonarQube, Veracode, and Checkmarx.

Dynamic application security testing (DAST) tools: These tools test running applications for vulnerabilities in real time. Examples include OWASP ZAP, Burp Suite, and Acunetix.

Interactive application security testing (IAST) tools: These tools combine elements of SAST and DAST by instrumenting the application and analyzing it from the inside while it runs. Examples include Contrast Security and Hdiv Security.

Vulnerability scanners: These tools scan networks and applications for vulnerabilities. Examples include Nessus and Nmap.

Container security: These tools provide security controls for containerized applications and infrastructure. Examples include Trivy, Snyk, and built-in cloud solutions (for example, Amazon ECR offers container image scanning).

Infrastructure-as-Code security: These tools scan infrastructure code for vulnerabilities and misconfigurations before it is deployed to the cloud. Examples include Checkov and tfsec.

Identity and access management (IAM) tools: IAM tools help to control access to resources and protect against unauthorized access. Examples include AWS IAM and Azure AD.

Security information and event management (SIEM) tools: These tools collect and analyze security event data from various sources, helping you detect security incidents and breaches. Examples include Splunk, Datadog, and SolarWinds.

By using these tools, organizations can integrate security into their DevOps pipeline, detect and respond to security incidents quickly, and reduce the risk of such incidents.


DevOps security testing

What is security testing in DevOps? It is the process of checking the security of code, infrastructure, and applications as part of the DevOps pipeline.

The test phase begins after the build artifact has been generated and successfully deployed to staging or test environments. Comprehensive testing takes a lot of time, but failures at this stage should still be detected as early as possible.

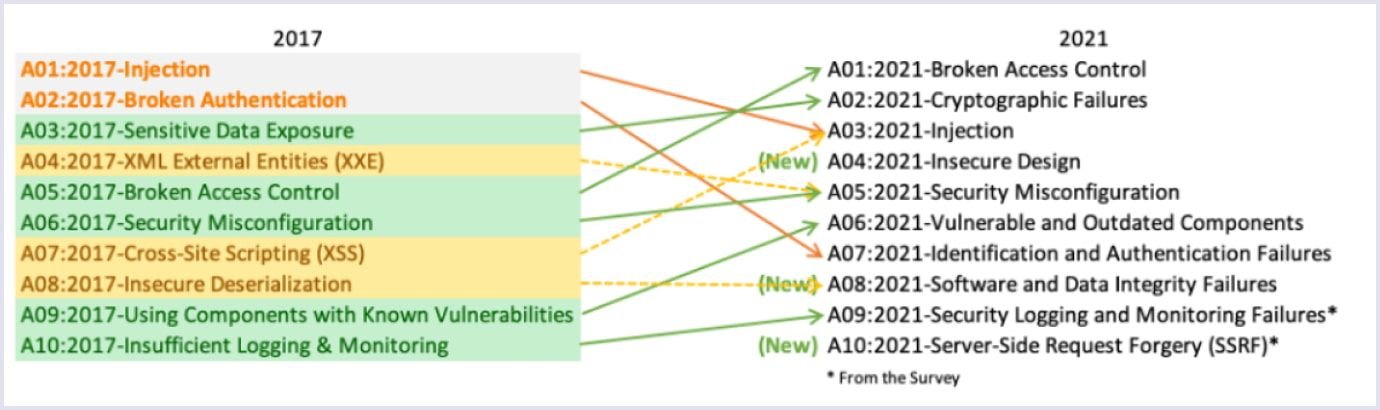

The testing phase uses DAST tools. They help test application workflows such as authorization, user authentication, and API endpoints, and detect vulnerabilities such as SQL injection. DAST is security-focused and helps you test your application for known high-severity issues, such as those listed in the OWASP Top 10.
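
DAST tools probe the running application from the outside. One very small passive check of the kind they perform, among many others, is looking for missing HTTP security headers. In the sketch below, the header list is an illustrative assumption, not a complete standard. Point `fetch_headers` only at an environment you are authorized to test, such as your own staging deployment.

```python
from urllib.request import urlopen

# Illustrative set of response headers a passive check might expect.
EXPECTED_HEADERS = {
    "strict-transport-security": "tells browsers to use HTTPS only",
    "content-security-policy": "restricts where scripts and other content load from",
    "x-content-type-options": "disables MIME-type sniffing",
    "x-frame-options": "helps prevent clickjacking",
}


def fetch_headers(url: str) -> dict[str, str]:
    """Request the URL and return its response headers."""
    with urlopen(url, timeout=10) as response:
        return dict(response.headers.items())


def missing_security_headers(headers: dict[str, str]) -> list[str]:
    """Return expected security headers absent from the response (case-insensitive)."""
    present = {name.lower() for name in headers}
    return [name for name in EXPECTED_HEADERS if name not in present]
```

Usage against a hypothetical staging host would look like `missing_security_headers(fetch_headers("https://staging.example.com"))`.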

There are many testing tools available, both paid and open source. They offer different functionality and support numerous programming language ecosystems. Examples include SpotBugs, Anchore, Brakeman, Clair, Aqua, WhiteHat Security, OWASP tools, and others.

Here are some common types of DevOps security testing:

Static application security testing (SAST). SAST tools scan source code for weaknesses such as SQL injection, cross-site scripting, and buffer overflows.

Dynamic application security testing (DAST). DAST tools test running applications for vulnerabilities in real time. These weaknesses can include injection attacks, cross-site scripting, and broken authentication.

Container security testing. Container security testing involves scanning container images for vulnerabilities, misconfigurations, and other security risks. This testing is essential when using containers in a DevOps pipeline.

Infrastructure-as-Code (IaC) security testing. IaC security testing involves checking infrastructure definitions for security risks and misconfigurations. It helps keep cloud infrastructure secure and compliant with industry standards and regulations.

Penetration testing. Conducting simulated cyberattacks through penetration testing helps identify system vulnerabilities and weaknesses. Typically performed by third-party security experts, this process enhances overall system security.

Compliance testing. Compliance testing ensures that systems meet regulatory requirements such as HIPAA (Health Insurance Portability and Accountability Act), PCI DSS (Payment Card Industry Data Security Standard), and GDPR. By verifying adherence to these standards, organizations can mitigate risks and ensure data protection.

Threat modeling. Employing threat modeling techniques enables the identification of potential security threats and vulnerabilities. It involves assessing the likelihood and impact of a security incident. This assessment allows for targeted risk mitigation strategies.
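
Threat modeling often ends with a simple risk ranking. The sketch below scores each threat as likelihood × impact on a 1 to 5 scale and sorts threats by that score. The scale, the `Threat` class, and the sample threats are hypothetical. Teams often use richer schemes, but the multiplication captures the idea of weighing how likely an incident is against how much damage it would cause.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    name: str
    likelihood: int  # 1 = rare ... 5 = almost certain (hypothetical scale)
    impact: int      # 1 = negligible ... 5 = severe (hypothetical scale)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(threats: list[Threat]) -> list[Threat]:
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)
```

Mitigation work then starts at the top of the ranked list.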

By incorporating these types of security testing into the DevOps pipeline, organizations can establish robust security measures. Integration of security throughout the entire development and deployment process ensures that systems remain secure at each step. This can help prevent security incidents, data breaches, and other security-related issues.

Codica’s clients can be sure that our security experts vet software apps while they are being developed and supported.

Codica experience and tips

At Codica, we use DevOps and DevSecOps practices to streamline the software development process and create secure projects. The security practices we apply in our DevOps services help protect every component used in our deployment processes.

An example of our successful project delivery is the development of CakerHQ, a SaaS-based platform tailored for bakery businesses. This innovative website simplifies the process of finding and booking desserts. It also optimizes business administration. Our team ensured that the solution was built to be modern and efficient.

To get a glimpse of CakerHQ in action, check out the video below.

Here are the key contributions our DevOps engineer made during this project:

- Planning the project's architecture in AWS (Amazon Web Services).

- Implementing infrastructure as code (IaC) for CakerHQ using Terraform, prioritizing security throughout.

- Configuring end-to-end CI/CD pipelines for both the front and back end.

- Writing Dockerfiles and thoroughly testing all container-related aspects.

- Ensuring the flawless functionality of the containers, leaving no room for errors.

- Setting up continuous monitoring and alerting systems, enabling rapid notifications in the event of unresponsiveness or server issues, ensuring swift resolution.

- Configuring monthly usage reports for the client's convenience.

- Establishing robust access security measures, guaranteeing the protection of sensitive data.

- Developing essential automation processes that eliminate bottlenecks and minimize failures and data loss.

- Implementing error-tracking systems like Sentry, aiding in prompt issue identification and resolution.

Following the successful launch of the CakerHQ project, we optimized costs according to their specific requirements. As a result, our client has a secure and robust solution.

We are glad to share with you some tips that we use for building our efficient DevSecOps culture. Let’s consider the main components of our projects’ security.

Infrastructure security

At Codica, we use infrastructure as code (IaC), an approach that manages infrastructure through code instead of manual processes. But why is infrastructure as code so critical?

The benefits of IaC are the following:

- The option to scale out automatically.

- Cost savings, because you don't pay for over-provisioned hardware.

- The ability to offload a large share of security processes to the primary cloud provider.

We enable AWS API logging with CloudTrail (a service that keeps an ongoing record of events in an AWS account) and store the logs in CloudWatch log groups. Thus, every action is recorded, and no one can deny an action they performed. Besides that, we use metric filters, so if someone does something unusual, we are notified instantly.
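
The metric-filter idea can be illustrated in Python. The sketch below reads a CloudTrail log file, which CloudTrail delivers as gzipped JSON with a top-level `Records` array. It then flags successful console sign-ins that did not use MFA, based on the `ConsoleLogin` event's `responseElements` and `additionalEventData.MFAUsed` fields. It mirrors the kind of condition a CloudWatch metric filter would express. The function names are hypothetical, and this is an illustration, not Codica's actual filter configuration.

```python
import gzip
import json


def load_cloudtrail_file(path: str) -> list[dict]:
    """Read a CloudTrail log file (gzipped JSON) and return its event records."""
    with gzip.open(path, "rt", encoding="utf-8") as handle:
        return json.load(handle)["Records"]


def console_logins_without_mfa(events: list[dict]) -> list[str]:
    """Return the ARNs of users who signed in to the console without MFA."""
    flagged = []
    for event in events:
        if event.get("eventName") != "ConsoleLogin":
            continue
        if (event.get("responseElements") or {}).get("ConsoleLogin") != "Success":
            continue  # failed sign-ins deserve a separate alert
        if (event.get("additionalEventData") or {}).get("MFAUsed") != "Yes":
            flagged.append((event.get("userIdentity") or {}).get("arn", "unknown"))
    return flagged
```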

At Codica, we prefer Terraform for our AWS-based web projects. Terraform is one of the most popular tools for implementing infrastructure as code, and it lets us create and update AWS infrastructure.

Besides, we always follow the recommendations of tfsec (a security scanner for Terraform code) to improve our infrastructure. We also recommend using Terraform's standalone security group rule resource, which lets you change security group rules without recreating the security group.

Inventory and config management

Inventory and configuration management help us understand how our architecture has changed. For example, if a server has been scaled up, configuration recording shows us when and how that happened.

In short, inventory and configuration management store data about the current state of the architecture, which helps us track how our configuration and architecture change over time.

We use tfsec, Terrascan, and driftctl for security scans and configuration recording (with Terraform states). We also apply CloudWatch metric filters to the CloudTrail log group, so we are alerted about configuration changes. That is why we don't rely on AWS Config much, although we still use its configuration recording.
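
Drift detection, which driftctl and `terraform plan` perform against real cloud APIs, comes down to comparing the recorded configuration with the actual one. The sketch below compares two plain dictionaries. `desired` stands in for values recorded in Terraform state, and `actual` stands in for what the cloud reports. Both shapes and the `diff_config` helper are hypothetical.

```python
def diff_config(desired: dict, actual: dict, prefix: str = "") -> list[str]:
    """Recursively list differences between desired and actual configuration."""
    changes = []
    for key in sorted(set(desired) | set(actual)):
        path = f"{prefix}{key}"
        if key not in actual:
            changes.append(f"missing: {path}")      # recorded but gone from the cloud
        elif key not in desired:
            changes.append(f"unmanaged: {path}")    # exists only in the cloud
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            changes.extend(diff_config(desired[key], actual[key], prefix=f"{path}."))
        elif desired[key] != actual[key]:
            changes.append(f"changed: {path} {desired[key]!r} -> {actual[key]!r}")
    return changes
```

In the test data below, the diff reveals that an instance was resized outside Terraform and that someone added a tag manually.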

Thanks to Terraform, we have many useful features built in. For example, they let us automatically check the cost of the architecture and see how it changes over time.

Besides, Terraform lets us apply these features at almost any scale while still keeping the configuration recorded in Terraform state and costs under control.

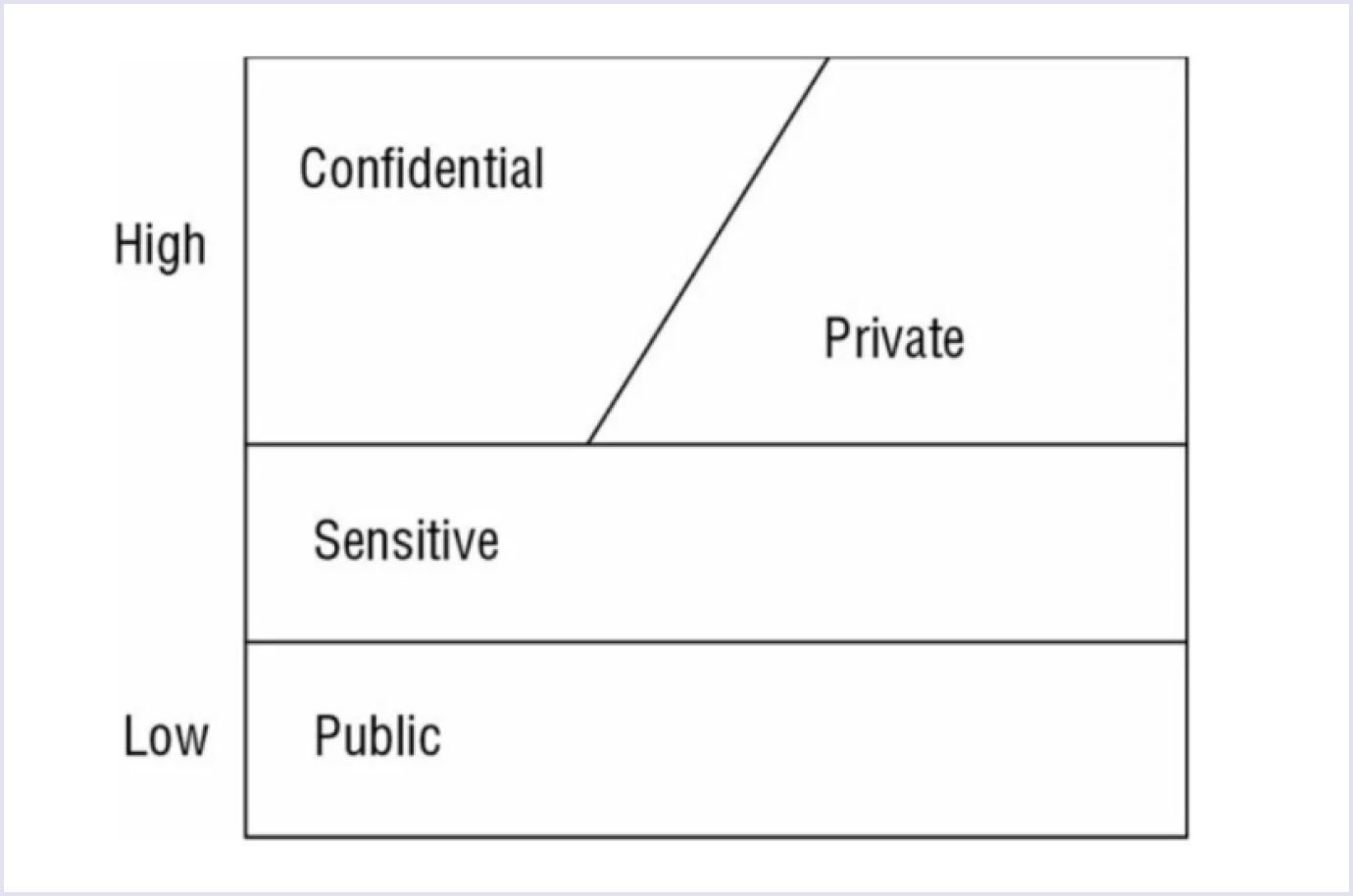

Data encryption

What is data encryption? It is a technical process that converts information into a secret code (ciphertext) that only holders of the right key can read. Data encryption hides the data that you send, receive, or store.

In general, encryption is one of the best ways to protect your business's sensitive data from cyber threats. It helps build a secure network architecture.

We do not encrypt everything because this is not efficient.

The image below shows examples of sensitive and non-sensitive data, which helps us determine what qualifies as sensitive information. Confidential and private data have high sensitivity levels; examples include database users and credentials. Conversely, public assets have a low sensitivity level.

Source: (ISC)² CISSP Certified Information Systems Security Professional Official Study Guide

So, it’s important to understand what is sensitive and what is not.

We encrypt all confidential data with AWS KMS keys (AWS Key Management Service lets you create and control the keys that encrypt data stored in AWS). We also use Terraform to configure encryption.
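
Deciding what to encrypt starts with classifying the data. The sketch below encodes the four commercial classification levels from the CISSP study guide cited above (public, sensitive, private, confidential) as an ordered enum. It then selects the fields that need encryption. Treating private and above as "must encrypt" follows the description of high-sensitivity data above. The field catalog itself is a hypothetical example.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Commercial data classification levels, ordered from lowest to highest."""
    PUBLIC = 0
    SENSITIVE = 1
    PRIVATE = 2
    CONFIDENTIAL = 3


# Hypothetical catalog mapping stored fields to their classification.
FIELD_CATALOG = {
    "db_password": Sensitivity.CONFIDENTIAL,
    "customer_email": Sensitivity.PRIVATE,
    "internal_roadmap": Sensitivity.SENSITIVE,
    "product_description": Sensitivity.PUBLIC,
}


def fields_to_encrypt(catalog: dict[str, Sensitivity],
                      minimum: Sensitivity = Sensitivity.PRIVATE) -> list[str]:
    """Return the names of fields at or above the given sensitivity level."""
    return sorted(name for name, level in catalog.items() if level >= minimum)
```

The selected fields would then be encrypted with KMS-managed keys, while public content stays unencrypted.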

Identity and access

Without proper access control in place, anyone could access the environment and perform unintended actions.

As you may have already noticed, we use Terraform a lot. We create users and configure their policies with Terraform. Why? This way, we can keep track of them. However, we do not create access keys with Terraform, so the keys never end up in our Terraform state; we generate them manually instead.

We also enable MFA (multi-factor authentication) for every console user. We always ask our clients to enable MFA on the root account and delete the root access keys. Using secret scanners, we make sure there are no keys in our code or Terraform configuration.

Sometimes we provide access to the AWS console for our developers. In these cases, we use strict policies.

Monitoring and logging

Simply put, logging is a record of everything that happens in the application and environment. This record is usually written to files and log streams.

Monitoring means setting up tools that track the state of the software and the servers it runs on. Good monitoring ensures that we get notified about problems within seconds of their appearance. It also helps us spot performance shortfalls and adjust server capacity.

While implementing DevOps at Codica, we changed our monitoring setup a few times before finding the one that suited our needs best. We settled on Prometheus with Alertmanager for monitoring and alerting.

Security of containers

Having secure web solutions architecture is good, but application and container security is also essential.

Custom users for your containers are a must. Keep in mind, however, that root inside a container has significantly fewer privileges than root on the host. A non-root user, in turn, traditionally cannot bind to the lower (privileged) ports, but kernel capabilities let us grant a non-root user such specific privileges instead of running as root.

So, why is it essential to have a different user? Because under certain conditions it is very easy to escape from a container running as root and become root on the host. You can read more about Docker container escapes in this blog post, which also lists the requirements for escaping as root and gives practical examples.

In short, the general recommendation is to use a private registry and encrypt it if possible. Also, scan images both at build time and with the registry's built-in scanner. Don't build containers that download anything at startup.
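
You can check whether an image will run as root before it is ever built. The sketch below is a minimal Dockerfile check. It tracks the `USER` instruction through the final build stage and reports whether the container would run as root, which is Docker's default. It ignores line continuations, `ARG` substitution, and other details. Linters such as hadolint implement this kind of rule properly.

```python
def runs_as_root(dockerfile: str) -> bool:
    """Return True if the final build stage never switches to a non-root USER."""
    user = "root"  # Docker's default when no USER instruction is given
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        keyword, _, rest = line.partition(" ")
        keyword = keyword.upper()
        if keyword == "FROM":
            user = "root"  # each build stage starts again with the default user
        elif keyword == "USER":
            user = rest.strip().split(":")[0]  # "name:group" -> "name"
    return user in {"root", "0"}
```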

Conclusion: play it safe

The main goal of this article is to show that moving toward secure development is a key step in building high-quality IT products. Security should be considered from the very first phase of the project life cycle, not as an afterthought. At the same time, DevSecOps is not a ready-made solution: establishing security in DevOps requires a well-defined roadmap tailored to each organization.

Implementing secure development practices ultimately yields cost savings by reducing the number of error fixes and optimizing development time. DevSecOps also improves collaboration among team members and ensures a clear delineation of roles. Most importantly, it enables the creation of more advanced and secure products for end users.

If you are looking for a reliable QA software testing company to implement your idea, contact us. We are always eager to help you develop a robust and secure web product!