AI agents are often described as complex or experimental. But in practice, they are engineered systems with clear roles and limits.

They combine data, models, and logic to perform specific tasks autonomously, such as answering questions, detecting patterns, or triggering actions. Understanding how these components fit together is the key to building an effective AI agent.

In this guide, we walk through each step of the process clearly and practically.

Understanding AI agents and their use cases

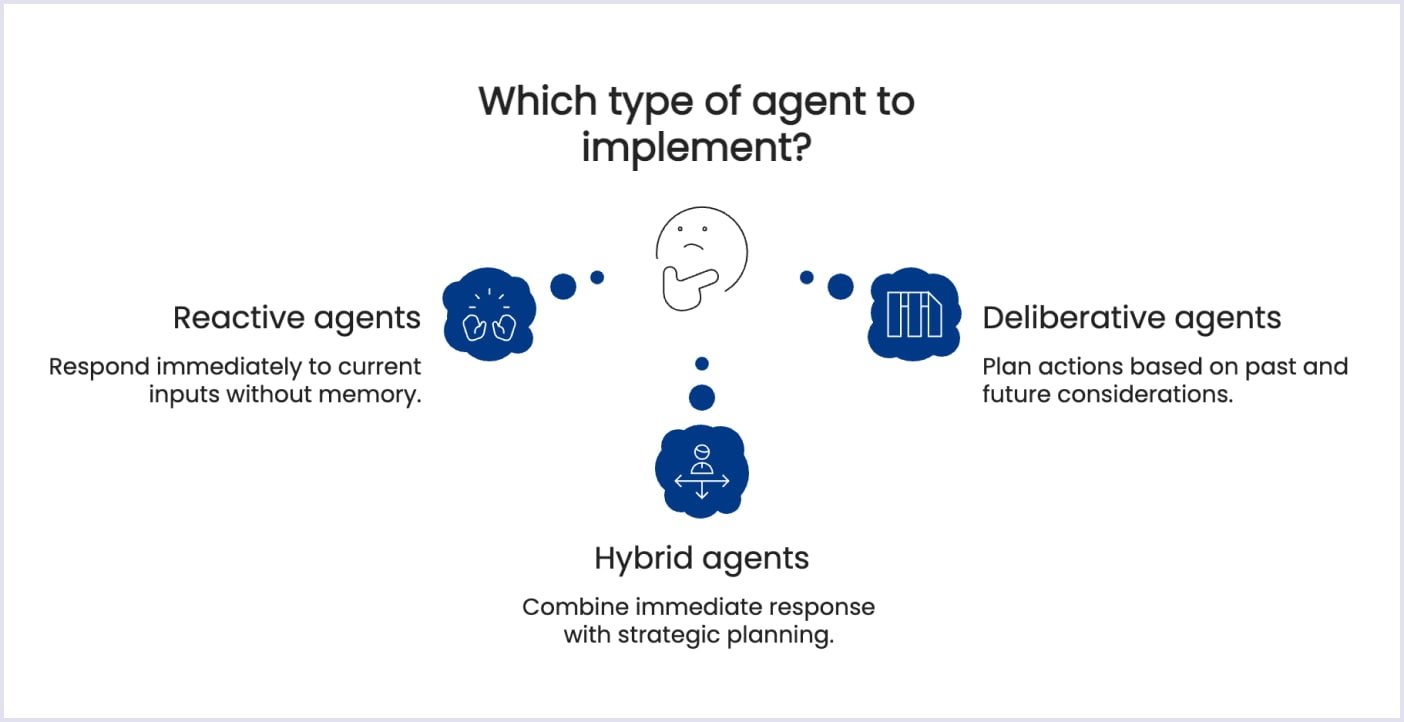

Let’s start with a definition. AI agents are systems that perceive the environment they operate in, use a decision engine (rules, a trained model, or a language model) to choose what to do, and take actions to achieve specific goals. Well-designed agents can improve user experience, reduce operational costs, and help products scale. Because they can handle a wide variety of tasks, AI agents are becoming core components of modern product strategies. There are several common types of agents.

Reactive agents

These agents respond directly and immediately to what they sense right now. They keep no memory of prior inputs or encounters and respond only to the current one.

For example, imagine a basic tool that tracks server status. When a server goes down, it immediately sends out an alert. The response depends only on the present state (the server status), without considering past or future circumstances.
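To make the distinction concrete, here is a minimal Python sketch of the server-status example. The function name, the `"down"` status string, and the alert format are hypothetical; the point is that the output depends only on the current input and nothing is remembered between calls.

```python
from typing import Optional


def react(server: str, status: str) -> Optional[str]:
    """Reactive agent: map the current observation directly to an action.

    No history is read or stored, so the same input always produces
    the same output.
    """
    if status == "down":
        return f"ALERT: {server} is down"
    return None  # nothing to do for a healthy server


# Usage: each observation is handled in isolation.
for server, status in [("api-1", "up"), ("api-2", "down")]:
    alert = react(server, status)
    if alert:
        print(alert)  # prints: ALERT: api-2 is down
```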

Deliberative agents

These agents can think ahead. They plan actions based on past and current conditions and can project likely future outcomes. To do this, they build and use an internal model (like a mental map) of their environment to decide which actions best achieve a given goal.

Personalized recommendation engines are a good example. Amazon-style recommendation systems use your past browsing and purchase history, along with patterns in other users' data, to suggest products you might like in the future.
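A deliberative agent keeps an internal model and consults it before acting. The sketch below is a deliberately simplified stand-in for a recommendation engine: it builds its model from how often items appear together in past orders and uses that model to choose suggestions. The class name and sample orders are hypothetical, and production recommenders typically use much richer models.

```python
from collections import Counter, defaultdict
from itertools import combinations


class CoPurchaseRecommender:
    """Deliberative sketch: learns an internal model (how often items are
    bought together) and plans recommendations from it."""

    def __init__(self) -> None:
        # item -> Counter of other items that appeared in the same order
        self.co_counts: defaultdict = defaultdict(Counter)

    def learn(self, orders: list[list[str]]) -> None:
        """Update the internal model from historical orders."""
        for order in orders:
            for a, b in combinations(set(order), 2):
                self.co_counts[a][b] += 1
                self.co_counts[b][a] += 1

    def recommend(self, history: list[str], k: int = 3) -> list[str]:
        """Score items the user does not own by co-occurrence with their history."""
        owned = set(history)
        scores: Counter = Counter()
        for item in owned:
            for other, count in self.co_counts.get(item, Counter()).items():
                if other not in owned:
                    scores[other] += count
        return [item for item, _ in scores.most_common(k)]


rec = CoPurchaseRecommender()
rec.learn([["laptop", "mouse"], ["laptop", "mouse", "bag"], ["mouse", "pad"]])
print(rec.recommend(["laptop"]))  # prints: ['mouse', 'bag']
```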

Hybrid agents

These agents blend the planning of deliberative agents with the fast responses of reactive agents. Because they can recall prior events and forecast outcomes, they act strategically, while still responding quickly when necessary (for safety or urgency).

A good illustration of hybrid behavior is an automated CI/CD (Continuous Integration/Continuous Deployment) pipeline in DevOps. The pipeline instantly triggers actions when code changes, which is reactive behavior. At the same time, it can be configured to analyze deployment patterns or past events to anticipate and resolve future integration issues, which is deliberative behavior.
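The hybrid pattern can be sketched as two layers in one agent: fixed rules that fire immediately on urgent events, and a deliberative layer that remembers recent deployments and changes its behavior when failures accumulate. The event names, window size, and 30% threshold are illustrative assumptions, not features of any specific CI/CD product.

```python
from collections import deque
from typing import Optional


class HybridDeployAgent:
    """Reactive rules for urgent events plus a deliberative layer that
    uses deployment history to anticipate problems."""

    def __init__(self, window: int = 10, failure_threshold: float = 0.3) -> None:
        self.history: deque = deque(maxlen=window)  # True = deploy succeeded
        self.failure_threshold = failure_threshold

    def on_event(self, event: str, success: Optional[bool] = None) -> str:
        # Reactive layer: respond immediately, no planning involved.
        if event == "production_down":
            return "rollback_now"
        if event == "code_pushed":
            return "run_pipeline"
        # Deliberative layer: remember outcomes and act on the trend.
        if event == "deploy_finished" and success is not None:
            self.history.append(success)
            failure_rate = self.history.count(False) / len(self.history)
            if failure_rate > self.failure_threshold:
                return "require_manual_review"
            return "continue"
        return "ignore"


agent = HybridDeployAgent(window=4)
print(agent.on_event("deploy_finished", success=True))   # prints: continue
print(agent.on_event("deploy_finished", success=False))  # prints: require_manual_review
print(agent.on_event("production_down"))                 # prints: rollback_now
```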

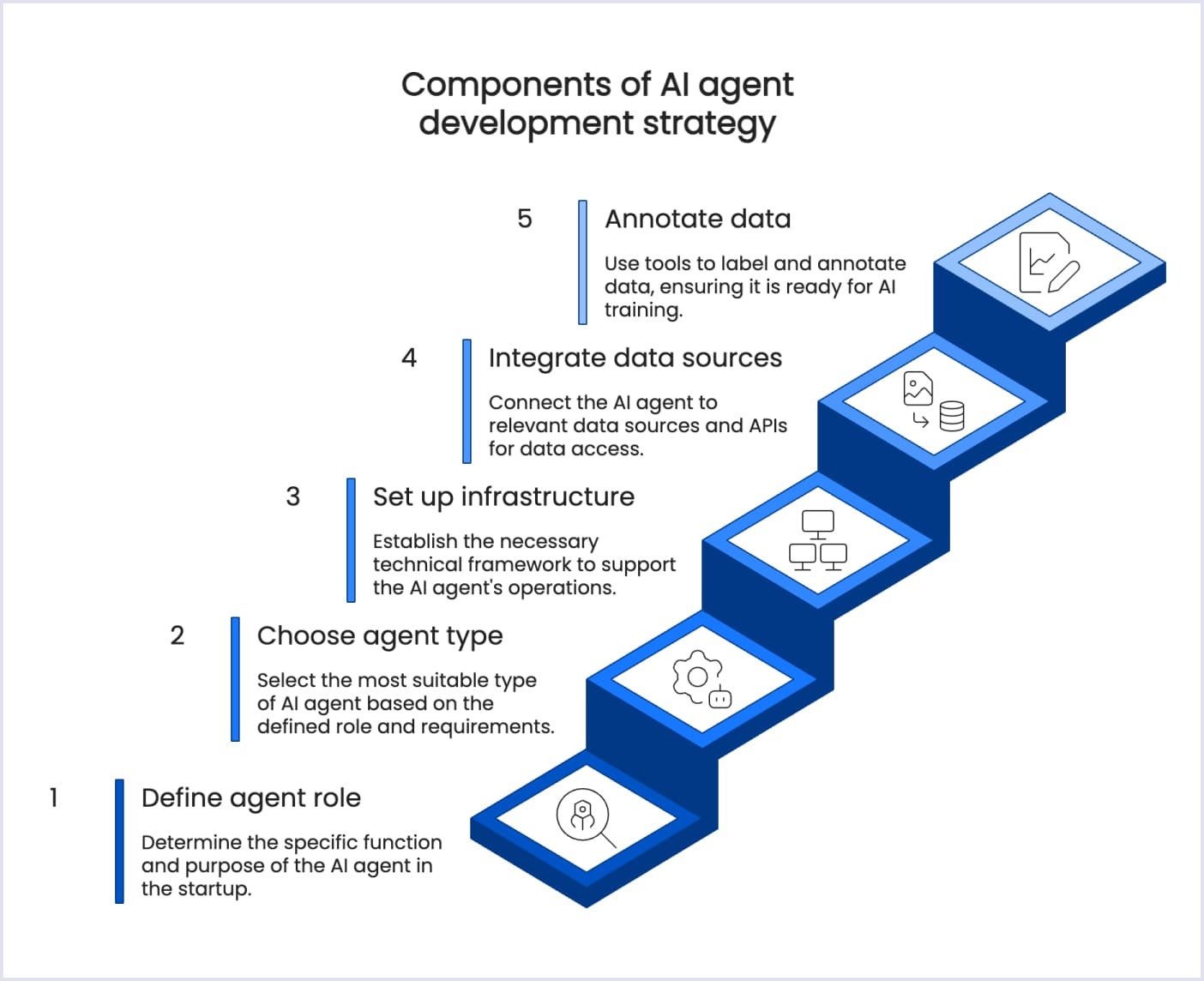

Planning your AI agent development strategy

A successful AI workflow automation integration starts with a clear development strategy. Begin by identifying the specific business problems the agent is meant to solve, such as reducing support load, speeding up onboarding, or detecting anomalies.

Next, define measurable key performance indicators (KPIs) such as response time reduction, accuracy, or user satisfaction, and align them with overall business goals.
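As an illustration of what "measurable" means in practice, the sketch below tracks one KPI, average first-response time, against its pre-agent baseline. The `KPI` class, the 30% reduction target, and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    """A measurable target tied to a business goal."""
    name: str
    baseline: float            # value before the agent was introduced
    target_change_pct: float   # e.g. -30.0 means "reduce by at least 30%"
    lower_is_better: bool = True

    def change_pct(self, current: float) -> float:
        """Relative change versus the baseline, in percent."""
        return (current - self.baseline) / self.baseline * 100

    def is_met(self, current: float) -> bool:
        change = self.change_pct(current)
        if self.lower_is_better:
            return change <= self.target_change_pct
        return change >= self.target_change_pct


response_time = KPI("avg_first_response_minutes", baseline=120, target_change_pct=-30.0)
print(round(response_time.change_pct(78), 1))  # prints: -35.0
print(response_time.is_met(78))                # prints: True
```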

Defining the agent’s role in your startup

Just as important, narrow the scope and decide exactly what your AI agent is responsible for within the product.

- Should the agent answer common customer questions?

- Should it flag suspicious transactions?

- Should it help users and guide them through product setup?

Starting with a focused, high-impact use case increases your chances of early success and faster iteration.

Choosing the right type of AI agent

Another important consideration is matching your use case with the right type of AI agent. Rule-based agents work well for predictable tasks with clear logic. Machine learning agents excel at pattern recognition in data-rich contexts. For dynamic user interaction, conversational AI agents, which are frequently driven by LLMs, are a strong fit.
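For the rule-based case, the "logic" can be as simple as a table of explicit rules. This hypothetical FAQ agent matches keywords to canned answers and escalates everything else, which is where a machine learning or LLM-driven agent (or a human) would take over. The intents, keywords, and replies are placeholders.

```python
import re

# intent -> (trigger keywords, canned reply); all values are placeholders
RULES: dict[str, tuple[set[str], str]] = {
    "reset_password": ({"password", "reset", "login"},
                       "You can reset your password under Settings > Security."),
    "billing": ({"invoice", "billing", "refund"},
                "Billing questions are handled by our finance team at billing@example.com."),
}


def answer(question: str) -> str:
    """Return the first matching canned reply, or ESCALATE if no rule applies."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    for keywords, reply in RULES.values():
        if words & keywords:
            return reply
    return "ESCALATE"  # hand off to a more flexible agent or a human


print(answer("How do I reset my password?"))
print(answer("Tell me a joke"))  # prints: ESCALATE
```

The trade-off is visible in the code: every behavior is explicit and auditable, but anything the rules did not anticipate falls through to escalation.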

Setting up the data infrastructure

A trustworthy AI agent is built on high-quality data. First, determine which data you need to train and evaluate your model. Then clean and preprocess it to ensure consistency and reduce noise. Labeled data is essential for supervised learning. Store your datasets with versioning for compliance and traceability.
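Dedicated tools exist for dataset versioning, but the core idea fits in a few lines: derive a version ID from the data's content, so identical snapshots always share an ID and any change produces a new one. This sketch assumes records are JSON-serializable dictionaries; the 12-character ID length is an arbitrary choice.

```python
import hashlib
import json


def dataset_version(records: list[dict]) -> str:
    """Deterministic content hash for a dataset snapshot.

    Each record is serialized with sorted keys, and the serialized lines
    are sorted, so neither row order nor key order affects the version.
    """
    lines = sorted(json.dumps(r, sort_keys=True, ensure_ascii=False) for r in records)
    digest = hashlib.sha256("\n".join(lines).encode("utf-8")).hexdigest()
    return digest[:12]


train_v1 = [{"text": "I can't log in", "label": "login_issue"},
            {"text": "Where is my invoice?", "label": "billing"}]
train_v2 = train_v1 + [{"text": "Cancel my plan", "label": "cancellation"}]

print(dataset_version(train_v1) == dataset_version(list(reversed(train_v1))))  # True
print(dataset_version(train_v1) == dataset_version(train_v2))                  # False
```

Storing this ID alongside each trained model makes it possible to trace exactly which data a given model was trained on.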

Data sources and APIs

Make use of a variety of data sources, such as your CRM, support tickets, product analytics software, and external APIs such as market or social media sentiment data. Additionally, training can be enhanced with public datasets, particularly for fundamental tasks like intent classification or language modeling.

Data annotation and labeling tools

Training high-performing models requires accurate labels. Annotate data with tools such as Amazon SageMaker Ground Truth (fully managed, with human-in-the-loop workflows) or Label Studio (open-source and customizable). For the best productivity, choose tools that support collaboration and integrate with your current workflows.
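Whichever tool you choose, a common quality check is to have several annotators label the same items and accept a label only when they agree. The sketch below does this with a majority vote, assuming labels have already been exported into a plain dictionary; it does not model the actual export formats of SageMaker Ground Truth or Label Studio, and the 0.6 agreement threshold is an arbitrary example.

```python
from collections import Counter


def aggregate_labels(annotations: dict[str, list[str]],
                     min_agreement: float = 0.6) -> tuple[dict[str, str], list[str]]:
    """Majority-vote each item's labels.

    Items whose winning label has less than `min_agreement` of the votes
    are returned separately so a human can review them.
    """
    final: dict[str, str] = {}
    needs_review: list[str] = []
    for item_id, labels in annotations.items():
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            final[item_id] = label
        else:
            needs_review.append(item_id)
    return final, needs_review


labels = {
    "ticket-1": ["refund", "refund", "refund"],
    "ticket-2": ["refund", "billing", "refund"],
    "ticket-3": ["refund", "billing", "other"],
}
final, review = aggregate_labels(labels)
print(final)   # prints: {'ticket-1': 'refund', 'ticket-2': 'refund'}
print(review)  # prints: ['ticket-3']
```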

Selecting the right AI/ML framework and tools

An AI agent is not a single model or tool. It is a system that combines models, logic, data, and integrations. The goal of the tech stack is to support this system and allow it to evolve.

Model and framework layer

Frameworks such as PyTorch and TensorFlow are commonly used to train and fine-tune machine learning models. They are well-suited for tasks like prediction, classification, and anomaly detection. For agents that process text or interact with users, large language models are often added to handle understanding and decision-making.

Orchestration and logic

Modern AI agents rely on orchestration layers that connect models with tools, business rules, and external data sources. This makes it possible to manage complex workflows without embedding all logic into a single model.
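A stripped-down view of that separation: a model proposes which tool to call, while the orchestration layer owns the tool registry and enforces business rules before anything runs. Here the "model" is a hypothetical keyword stub standing in for an LLM or classifier, and the tools and the "refunds require a verified user" rule are illustrative.

```python
from typing import Callable


# Hypothetical tools the agent is allowed to call.
def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"


def issue_refund(order_id: str) -> str:
    return f"Refund issued for order {order_id}"


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "issue_refund": issue_refund,
}


def is_allowed(tool: str, user_verified: bool) -> bool:
    """Business rule kept outside the model: refunds need a verified user."""
    return tool != "issue_refund" or user_verified


def model_decide(message: str) -> tuple[str, str]:
    """Stand-in for an LLM or classifier that picks a tool and its argument."""
    tool = "issue_refund" if "refund" in message.lower() else "lookup_order"
    return tool, message.split()[-1]  # assume the order ID is the last word


def orchestrate(message: str, user_verified: bool) -> str:
    tool, arg = model_decide(message)
    if tool not in TOOLS:
        return "Unknown tool requested"
    if not is_allowed(tool, user_verified):
        return "Escalated to a human agent"
    return TOOLS[tool](arg)


print(orchestrate("Where is order A123", user_verified=False))
print(orchestrate("Please refund A123", user_verified=False))
```

Because the rule lives in `is_allowed` rather than in the model, it can be changed or audited without retraining anything.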

Infrastructure and deployment

Cloud platforms like AWS, Google Cloud, and Microsoft Azure provide scalable infrastructure for training, real-time inference, and monitoring. When selecting a platform, consider scalability, security, data residency, and compatibility with your existing systems.


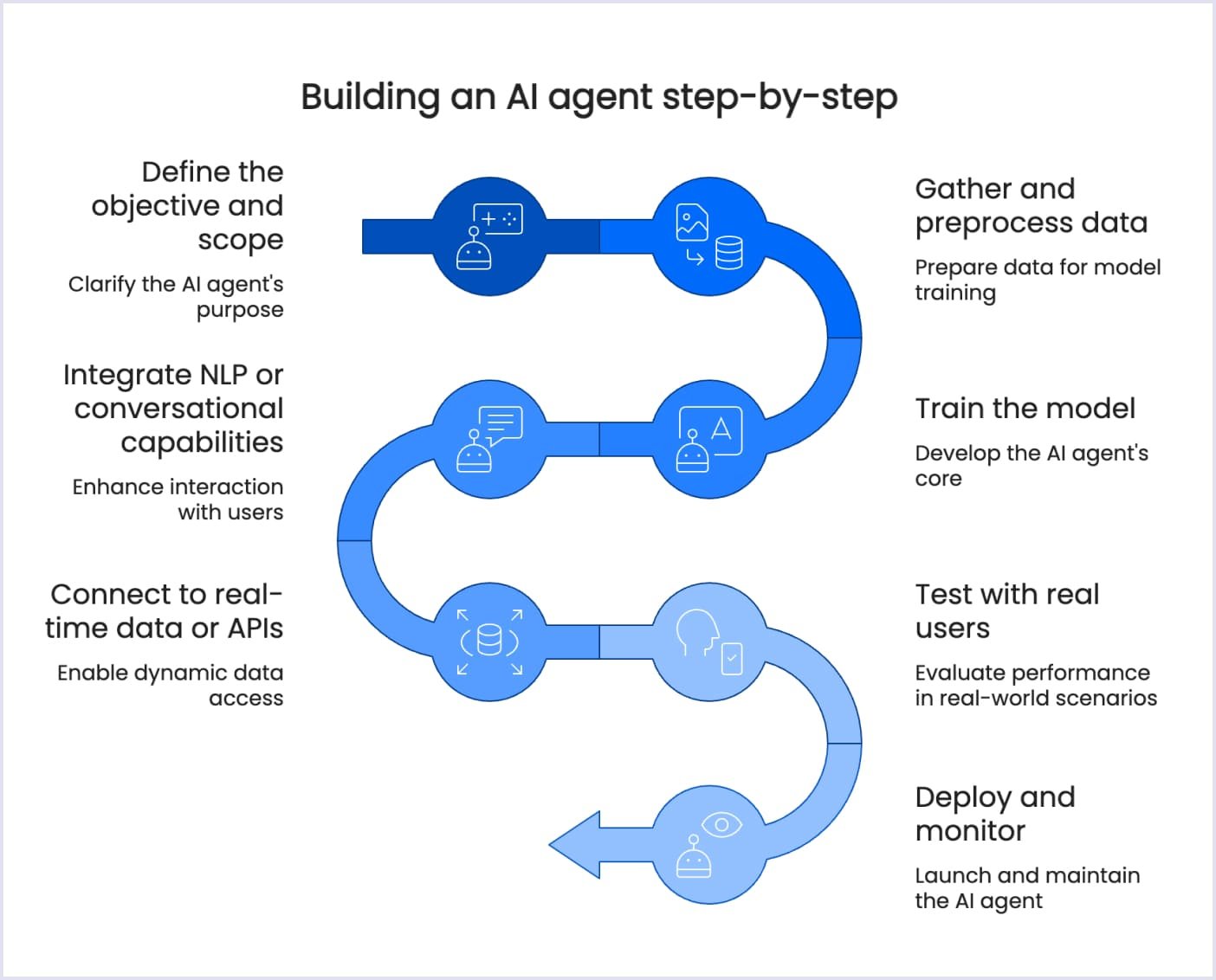

Step-by-step guide to building an AI agent

Step 1: Define the objective and scope

At Codica, the first step is always our product discovery service. It helps us clearly outline the primary purpose of your AI agent, form a focused, measurable objective, and define the scope. As mentioned earlier, we set KPIs early to track the agent’s success throughout the development cycle.

Step 2: Gather and preprocess data

The performance of AI and ML agents depends critically on the quality of the data used to build them. Working closely with you, we gather comprehensive datasets relevant to your goal, such as labeled intent data or customer interaction logs.

We then clean the data to remove irrelevant material, duplicates, and inconsistencies. To make sure your AI agent learns from the correct input, preprocessing may also include augmentation to enrich small datasets, normalization of numerical data, or tokenization of text.
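Three of these preprocessing steps can be sketched in plain Python: trimming and de-duplicating text, min-max normalization for numeric features, and a simple tokenizer. These are illustrative helpers; in practice you would usually use the tokenizer that ships with your chosen model and an established data library.

```python
import re


def clean_texts(texts: list[str]) -> list[str]:
    """Collapse whitespace, drop empty strings, and remove case-insensitive
    duplicates, keeping the first occurrence."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for text in texts:
        text = " ".join(text.split())
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


def min_max_normalize(values: list[float]) -> list[float]:
    """Scale numbers into [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def tokenize(text: str) -> list[str]:
    """Very simple lowercase word tokenizer (keeps apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


print(clean_texts(["  Hello  world ", "hello world", "", "Bye"]))  # ['Hello world', 'Bye']
print(min_max_normalize([10, 20, 30]))                             # [0.0, 0.5, 1.0]
print(tokenize("Can't log in!"))                                   # ["can't", 'log', 'in']
```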

Step 3: Train the model

Training an AI agent usually starts with pre-trained models, which help reduce development time and achieve strong initial results. These models can be fine-tuned with domain-specific data to better match real business tasks.

Training from scratch is typically justified only when the domain is highly specialized or strict control is required, and even then only if sufficient data and compute resources are available. In both cases, models must be evaluated regularly, tuned through iteration, and tested against real scenarios to ensure reliable performance.
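For a binary task such as flagging suspicious transactions, evaluating regularly usually means recomputing a few metrics on a held-out test set after every training run. A minimal dependency-free sketch follows; scikit-learn's `accuracy_score`, `precision_score`, and `recall_score` compute the same quantities. The sample labels are made up.

```python
def evaluate(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, and recall for a binary task (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed cases
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# 1 = suspicious transaction, 0 = normal (hypothetical test labels)
print(evaluate(y_true=[1, 0, 1, 1, 0], y_pred=[1, 0, 0, 1, 1]))
```

Tracking precision and recall separately matters here: a fraud-flagging agent can reach high accuracy simply by rarely flagging anything.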

Step 4: Integrate NLP or conversational capabilities (if applicable)

If an AI agent needs to work with text or users, language understanding becomes essential. Modern agents often rely on large language models to interpret input, manage context, and support decision-making.

Conversational behavior is controlled through structured workflows that define responses, handle edge cases, and maintain consistency. This approach allows AI agents to interact naturally while remaining predictable and easy to manage.
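A structured workflow can be as simple as a small state machine. In the hypothetical onboarding flow below, a language model (not shown) would turn free-form messages into clean values, while the code decides what happens next, validates input, and handles edge cases such as a request for a human. The step names and messages are illustrative.

```python
class OnboardingFlow:
    """Hypothetical setup workflow: the code, not the model, controls the flow."""

    ESCALATION_WORDS = {"help", "human", "agent"}

    def __init__(self) -> None:
        self.step = "ask_company_name"
        self.data: dict[str, str] = {}

    def handle(self, user_input: str) -> str:
        text = user_input.strip()
        # Edge case available at every step: the user wants a person.
        if text.lower() in self.ESCALATION_WORDS:
            return "Connecting you to a support specialist."
        if self.step == "ask_company_name":
            if not text:
                return "Please enter your company name."
            self.data["company"] = text
            self.step = "ask_team_size"
            return "How many people are on your team?"
        if self.step == "ask_team_size":
            if not text.isdigit():  # validate before advancing
                return "Please enter the team size as a number."
            self.data["team_size"] = text
            self.step = "done"
            return f"Thanks! {self.data['company']} is set up for {text} users."
        return "Setup is already complete."


flow = OnboardingFlow()
for message in ["Acme", "ten", "10"]:
    print(flow.handle(message))
```

Invalid input never advances the state, which is what keeps the conversation predictable even when users go off-script.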

Step 5: Connect to real-time data or APIs

Next, make sure the AI agent can respond dynamically to changing conditions by integrating real-time data streams or APIs. API connections need careful treatment, including error handling, retries, and data validation, which keeps performance reliable under varying conditions.
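One common way to handle these concerns is to retry transient network failures with exponential backoff and validate every response before the agent uses it. In this sketch the payload shape (`currency`, `rate`) and the retry settings are hypothetical, and many production setups also add random jitter to the delays. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Any, Callable


class ValidationError(Exception):
    """Raised when an API response does not match the expected shape."""


def validate_rate(payload: dict[str, Any]) -> float:
    """Hypothetical schema: {"currency": str, "rate": positive number}."""
    rate = payload.get("rate")
    if not isinstance(payload.get("currency"), str):
        raise ValidationError(f"missing or invalid currency: {payload!r}")
    if not isinstance(rate, (int, float)) or rate <= 0:
        raise ValidationError(f"missing or invalid rate: {payload!r}")
    return float(rate)


def fetch_with_retries(fetch: Callable[[], dict[str, Any]], retries: int = 3,
                       base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> float:
    """Call `fetch`, retrying transient network errors with exponential backoff.

    Invalid data is not retried: it raises ValidationError immediately.
    """
    for attempt in range(retries + 1):
        try:
            payload = fetch()
        except (ConnectionError, TimeoutError):
            if attempt == retries:
                raise  # give up after the last allowed attempt
            sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
            continue
        return validate_rate(payload)
    raise RuntimeError("no attempts were made; retries must be >= 0")


attempts = {"count": 0}


def flaky_rate_api() -> dict[str, Any]:
    """Simulated API that fails twice before answering."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary network error")
    return {"currency": "EUR", "rate": 1.08}


delays: list[float] = []
print(fetch_with_retries(flaky_rate_api, sleep=delays.append))  # prints: 1.08
print(delays)                                                   # prints: [0.5, 1.0]
```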

Step 6: Test with real users

Our QA services are just as crucial as the other stages. To confirm the agent's real-world performance, our team runs structured testing with actual end users. We use A/B testing, beta deployments, and usability tests to collect both qualitative and quantitative feedback.

We then analyze this feedback to identify unexpected problems or areas for improvement and make the necessary adjustments to your model. This helps ensure that your agent evolves in line with user expectations and real-world conditions.
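For the A/B tests mentioned above, two building blocks are worth getting right: assigning each user consistently to one variant, and checking whether a difference in success rates is larger than chance would explain. This sketch uses hash-based bucketing and a standard two-proportion z-test; the experiment name and the counts are hypothetical.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "agent-v2") -> str:
    """Deterministically bucket a user into variant A or B.

    Hashing (experiment, user) keeps each user in the same variant across
    sessions while splitting users roughly 50/50.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def two_proportion_z(success_a: int, n_a: int, success_b: int, n_b: int) -> float:
    """z-statistic for the difference in success rates (B minus A).

    Uses the pooled proportion for the standard error (assumes the pooled
    rate is strictly between 0 and 1). |z| > 1.96 corresponds to
    significance at the 5% level for a two-sided test.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / se


# Hypothetical results: 1,000 users per variant, "success" = issue resolved
z = two_proportion_z(success_a=100, n_a=1000, success_b=130, n_b=1000)
print(f"z = {z:.2f}")  # prints: z = 2.10
```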

Step 7: Deploy and monitor

Once development and testing are complete, we deploy the AI model through continuous integration and continuous deployment (CI/CD) pipelines for reliable releases. At Codica, we often choose cloud environments such as AWS or Azure and use container tools such as Docker and Kubernetes for scalability.

After deployment, we closely monitor agent performance, using tools such as Prometheus and Grafana to track real-time metrics and user interactions.
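Prometheus and Grafana handle metric collection and dashboards in production. The sketch below only illustrates the kind of logic behind such alerts, a rolling window of recent requests with error-rate and p95-latency checks, using the standard library. The class, window size, and thresholds are hypothetical.

```python
from collections import deque


class RollingMonitor:
    """Track recent requests and flag error-rate or latency problems."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_p95_ms: float = 2000.0) -> None:
        self.samples: deque = deque(maxlen=window)  # (latency_ms, ok) pairs
        self.max_error_rate = max_error_rate
        self.max_p95_ms = max_p95_ms

    def record(self, latency_ms: float, ok: bool) -> None:
        self.samples.append((latency_ms, ok))

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        return sum(1 for _, ok in self.samples if not ok) / len(self.samples)

    def p95_latency(self) -> float:
        """Nearest-rank 95th percentile of latencies in the window."""
        if not self.samples:
            return 0.0
        latencies = sorted(latency for latency, _ in self.samples)
        rank = (95 * len(latencies) + 99) // 100  # ceil(0.95 * n) in integer math
        return latencies[rank - 1]

    def alerts(self) -> list[str]:
        found = []
        if self.error_rate() > self.max_error_rate:
            found.append(f"error rate {self.error_rate():.1%} exceeds {self.max_error_rate:.1%}")
        if self.p95_latency() > self.max_p95_ms:
            found.append(f"p95 latency {self.p95_latency():.0f} ms exceeds {self.max_p95_ms:.0f} ms")
        return found


monitor = RollingMonitor(window=20, max_p95_ms=1500)
for i in range(1, 21):
    monitor.record(latency_ms=i * 100, ok=(i != 20))  # one failure in 20 requests
print(monitor.alerts())  # prints: ['p95 latency 1900 ms exceeds 1500 ms']
```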

Ensuring ethical, compliant AI agent development

AI agents must be designed with transparency, fairness, and human control in mind. Teams should clearly understand how decisions are made, what data is used, and how the system behaves in edge cases. This makes AI easier to audit, debug, and safely operate.

Fairness requires continuous monitoring. Training data and agent outputs should be reviewed regularly to detect biased or inconsistent behavior and corrected when needed. Bias management is an ongoing process, not a one-time check.
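A simple starting point for such reviews is comparing how often the agent produces a positive outcome for different groups (sometimes called the demographic parity difference). The sketch assumes decisions are logged as `(group, outcome)` pairs; the group labels and the 0.1 threshold are hypothetical, and a large gap is a signal to investigate rather than proof of bias.

```python
from collections import defaultdict


def outcome_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. approvals) per group."""
    totals: defaultdict = defaultdict(int)
    positives: defaultdict = defaultdict(int)
    for group, positive in records:
        totals[group] += 1
        positives[group] += int(positive)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records: list[tuple[str, bool]], max_gap: float = 0.1) -> tuple[float, bool]:
    """Largest difference in positive-outcome rates between any two groups,
    and whether it exceeds `max_gap` and should be investigated."""
    rates = outcome_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return gap, gap > max_gap


decisions = ([("region_a", True)] * 8 + [("region_a", False)] * 2
             + [("region_b", True)] * 5 + [("region_b", False)] * 5)
print(outcome_rates(decisions))  # prints: {'region_a': 0.8, 'region_b': 0.5}
print(parity_gap(decisions))     # gap of about 0.3, flagged as True
```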

Human oversight is mandatory. AI agents automate decisions, but responsibility always remains with people. Critical actions must allow review, escalation, and override.
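One way to build review, escalation, and override into the agent itself is a gate in front of its actions. In this sketch, low-risk actions run directly while critical ones wait in a queue for a person's decision; the `Action` type, the 500-unit amount limit, and the list of critical actions are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    name: str
    amount: float = 0.0


@dataclass
class OversightGate:
    """Execute low-risk actions; queue critical ones for a human decision."""
    amount_limit: float = 500.0
    critical_actions: frozenset = frozenset({"close_account", "block_user"})
    review_queue: list = field(default_factory=list)

    def submit(self, action: Action) -> str:
        if action.name in self.critical_actions or action.amount > self.amount_limit:
            self.review_queue.append(action)  # escalate instead of acting
            return "pending_review"
        return "executed"

    def review(self, approve: bool) -> str:
        """A person approves or overrides the oldest pending action."""
        action = self.review_queue.pop(0)
        return f"{action.name}: {'executed' if approve else 'rejected'}"


gate = OversightGate()
print(gate.submit(Action("refund", amount=50)))   # prints: executed
print(gate.submit(Action("refund", amount=900)))  # prints: pending_review
print(gate.review(approve=False))                 # prints: refund: rejected
```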

AI agents must also comply with data protection and privacy regulations. This includes data minimization, secure storage, consent management, and audit logs. Strong compliance reduces legal risk and supports long-term stability.
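Two of these practices translate directly into code: data minimization (keep only the fields a task needs) and an audit trail of what the agent accessed. The sketch below uses a hypothetical field list and log format.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical minimal schema: the only fields the support agent needs.
ALLOWED_FIELDS = {"ticket_id", "product", "message"}


def minimize(record: dict) -> dict:
    """Data minimization: drop every field the agent does not need."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def audit_entry(actor: str, action: str, record: dict) -> str:
    """Build one JSON line for an append-only audit log.

    The record is referenced by a SHA-256 fingerprint instead of being
    copied, so the log does not duplicate the data it describes.
    """
    fingerprint = hashlib.sha256(
        json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "record_sha256": fingerprint,
    })


raw = {"ticket_id": "T-1001", "product": "mobile app", "message": "App crashes on login",
       "email": "jane@example.com", "phone": "+1 555 0100"}
clean = minimize(raw)
print(clean)  # email and phone are gone
print(audit_entry("support-agent", "read_ticket", clean))
```

Note that a hash of personal data is a pseudonymous reference, not anonymization, so the audit log itself still needs access controls.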

Optimizing AI agents post-launch

AI agents require continuous improvement after deployment. Real usage reveals patterns, errors, and limitations that cannot be fully predicted during development.

Optimization starts with monitoring real-world performance, including accuracy, reliability, and user interactions. User feedback and operational data should directly inform updates.

Most improvements are incremental. Fine-tuning models, adjusting decision logic, or updating workflows is often more effective than full retraining. Any change should be validated through testing to avoid regressions.
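Validating a change can be automated as a gate in the release pipeline: compare the candidate's metrics on a fixed test set with those of the version currently in production and block the release if anything drops. This is a minimal sketch assuming higher is better for every tracked metric; the metric names, values, and 0.01 tolerance are hypothetical.

```python
def regression_gate(baseline: dict[str, float], candidate: dict[str, float],
                    tolerance: float = 0.01) -> tuple[bool, list[str]]:
    """Accept a candidate model only if no tracked metric drops by more than
    `tolerance` (absolute) versus the production baseline.

    Assumes higher is better for every metric; a metric missing from the
    candidate counts as a regression.
    """
    regressions = [
        name for name, base_value in baseline.items()
        if candidate.get(name, float("-inf")) < base_value - tolerance
    ]
    return not regressions, regressions


production = {"accuracy": 0.91, "recall": 0.84}
print(regression_gate(production, {"accuracy": 0.93, "recall": 0.80}))  # (False, ['recall'])
print(regression_gate(production, {"accuracy": 0.905, "recall": 0.84}))  # (True, [])
```

The first candidate improves accuracy but is still rejected, which is exactly the kind of silent trade-off this check is meant to catch.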

Treating optimization as an ongoing process keeps AI agents relevant, reliable, and effective over time.

Bottom line

To wrap things up, AI agent development is not an easy task. It is a complex effort with many components to build and integrate. Given this scope and complexity, our custom software development services are the way to go. With our team’s expertise, you can expect a reliable solution that meets all your requirements, has the necessary features, and is easy to implement and work with.

Feel free to contact us to get a quote. In the meantime, visit our portfolio! It features a variety of solutions we have built, from advanced marketplaces to smart SaaS solutions and apps.