Weaviate is a vector database that helps store large datasets for search engines, recommendation systems, and audio/video resources. Vectors, or vector embeddings, are arrays of numbers that represent data objects. Embedding models place similar objects close to each other in this multi-dimensional space, which makes it possible to search words, sentences, images, audio, and video by meaning.

Companies and startups that invest in artificial intelligence solutions and integrations will benefit from vector databases. Such databases are helpful for processing large chunks of data with high precision and scalability. Thus, vector databases enhance the user experience and bring you more prospects and customers.

This blog post discusses why vector databases are helpful for modern solutions. Our experts also share how you can integrate the Weaviate vector search engine into a solution to process data more effectively. At the end of the article, we share our experience of integrating Weaviate into a recruitment platform to improve HR specialists’ experience.

Now, let’s discover how Weaviate and vector databases shape modern solutions and help deliver a better user experience.

Weaviate: a brief description

Weaviate is an open-source vector database that stores data for use by artificial intelligence (AI) and machine learning (ML) models. Unlike traditional databases, Weaviate stores data objects together with their vector embeddings, so it can find data by meaning rather than only by exact matches. It also offers data management and security features that benefit businesses. Developers can integrate Weaviate during web application development to create custom search, recommendation systems, or plugins for ChatGPT.

In 2022, Weaviate’s open-source downloads crossed the two million mark. In April 2023, the company raised $50 million in Series B funding.

Although Weaviate is relatively new to the market, it combines established practices with new approaches, bringing together the advantages of both for searching and presenting data. Let’s see what benefits this database offers:

- Open source: Weaviate is open source, so anyone can use and self-host it. It is also available as a managed software as a service (SaaS) offering that you can scale as needed, with both SaaS and hybrid SaaS options;

- Hybrid search: the database stores both data objects and their vector embeddings, so you can combine keyword and vector search for the best results;

- Horizontal scalability: you can scale Weaviate to billions of data objects to match your needs for ingestion rate, dataset size, and queries per second;

- Instant vector search: you can run a similarity search over raw vectors or data objects, even with filters. A typical nearest-neighbor search over millions of objects in Weaviate takes well under 100 ms;

- Optimized for cloud-native environments: Weaviate is designed as a cloud-native database with the relevant fault tolerance and ability to scale with workloads;

- Modular ecosystem for smooth integrations: you can bring your own vectors to Weaviate or use optional modules that integrate with OpenAI, Cohere, and Hugging Face to apply their ML models. Developers can use modules to vectorize data or extend Weaviate’s capabilities;

- Weaviate API options: clients can interact with the database through RESTful or GraphQL APIs.
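To make the hybrid search idea more concrete, here is a minimal Ruby sketch of one way to fuse keyword and vector results: rescale each score list to the 0..1 range and blend the two with a weight alpha (1.0 means pure vector search, 0.0 means pure keyword search). This is similar in spirit to Weaviate’s alpha setting for hybrid queries, but the method names, inputs, and min-max normalization here are illustrative assumptions, not Weaviate’s internal code.

```ruby
# Illustrative sketch of hybrid score fusion (not Weaviate's implementation).
# Inputs are Hashes of document id => raw score, where higher is better.

# Rescale scores to 0..1 so keyword and vector scores become comparable.
def min_max_normalize(scores)
  return {} if scores.empty?

  min, max = scores.values.minmax
  range = max - min
  scores.transform_values { |s| range.zero? ? 1.0 : (s - min).to_f / range }
end

# alpha is the weight of the vector score; (1 - alpha) weights the keyword score.
def hybrid_fuse(keyword_scores, vector_scores, alpha: 0.5)
  kw = min_max_normalize(keyword_scores)
  vec = min_max_normalize(vector_scores)
  ids = kw.keys | vec.keys # documents found by either search

  fused = ids.map do |id|
    # A document missing from one result list gets 0 for that signal.
    [id, alpha * vec.fetch(id, 0.0) + (1 - alpha) * kw.fetch(id, 0.0)]
  end
  fused.sort_by { |_id, score| -score } # best match first
end

keyword = { 'doc1' => 12.0, 'doc2' => 3.0 } # e.g. keyword (BM25-style) scores
vector  = { 'doc2' => 0.9, 'doc3' => 0.5 }  # e.g. cosine similarities
p hybrid_fuse(keyword, vector, alpha: 0.75)
```

With alpha set to 0.75, the vector signal dominates, so doc2 ranks first even though doc1 is the strongest keyword match.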

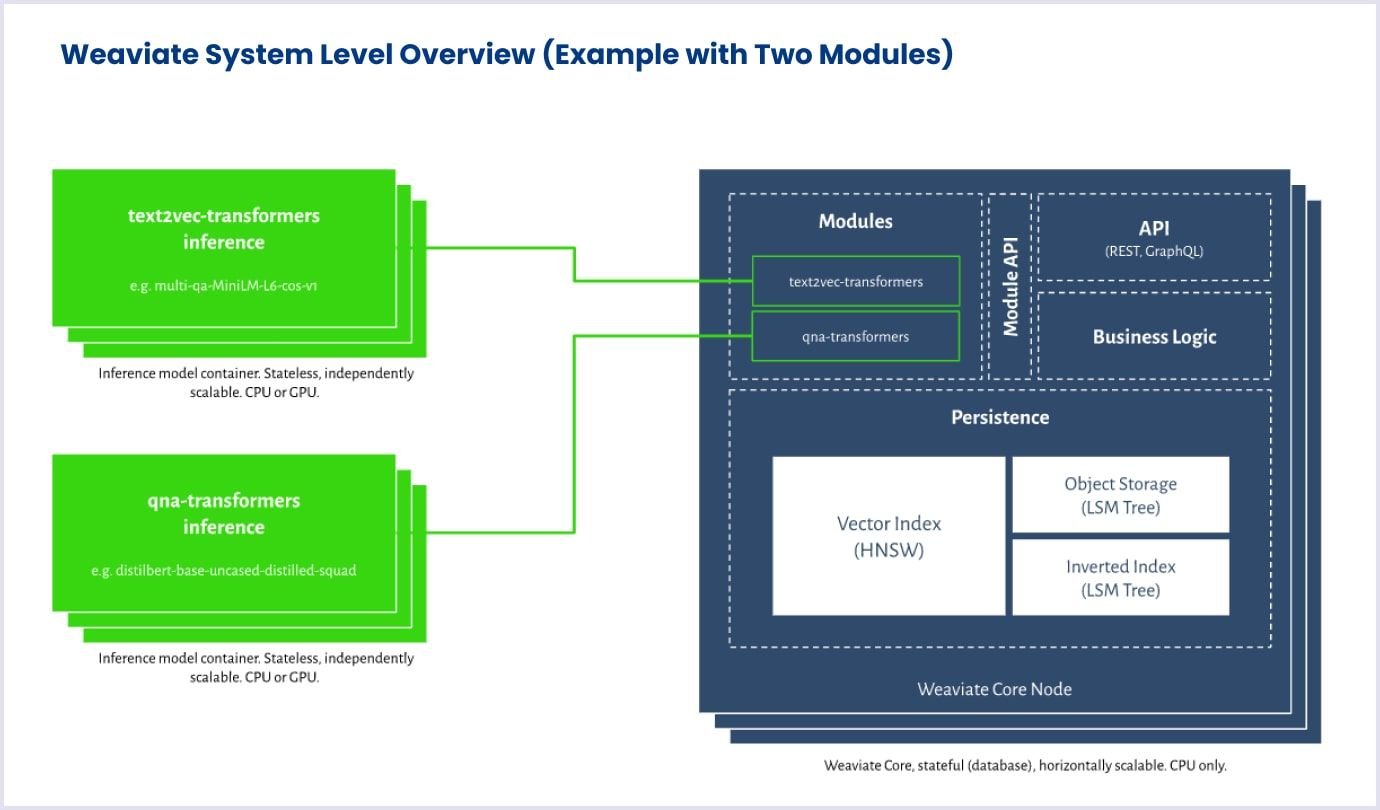

The image below presents a 30,000-foot view of Weaviate’s architecture. Two modules are given as examples. Also, the image presents Weaviate’s vector index, inverted index, and object storage, which show how data is organized and stored in Weaviate.

Source: Weaviate.io

Keyword search vs. semantic search

To understand the importance of vector databases like Weaviate, we should first understand their foundations. Vector databases use semantic search, also known as vector or similarity search, whereas traditional search systems use keyword search. Why is this important? Because semantic search emerged as a way to deal with the huge volumes of data that web solutions and businesses have accumulated.

Keyword search has undeniable advantages, but it lacks the benefits that semantic search provides. Below, we discuss these basic search methods in more detail and how they help find data.

Keyword search and relational databases: working method

This approach to searching documents is based on a data structure called an inverted index. An inverted index is organized by words, and each word points to the set of documents that contain it. This way, you can find a document or a web page by a particular term.

Inverted indexes are widely used in search engines and database systems that need efficient keyword search. They help find the necessary items among thousands or millions of documents without scanning every document.
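The following minimal Ruby sketch shows the word-to-documents mapping described above. The class name and the simple lowercase tokenization are assumptions for illustration; real search engines also stem words, drop stop words, and store extra data such as term positions.

```ruby
require 'set'

# Minimal inverted index: each word points to the set of document ids
# that contain it. Tokenization is a simplified lowercase word split.
class InvertedIndex
  def initialize
    @postings = Hash.new { |hash, word| hash[word] = Set.new }
  end

  def add(doc_id, text)
    tokenize(text).each { |word| @postings[word] << doc_id }
  end

  # Returns ids of documents that contain ALL query words (AND semantics).
  def search(query)
    words = tokenize(query)
    return Set.new if words.empty?

    # fetch does not trigger the default block, so lookups add no entries.
    words.map { |w| @postings.fetch(w, Set.new) }.reduce(:&)
  end

  private

  def tokenize(text)
    text.downcase.scan(/[a-z0-9]+/)
  end
end

index = InvertedIndex.new
index.add(1, 'The dog chased the cat')
index.add(2, 'A wolf is a wild dog')
index.add(3, 'Cats sleep a lot')

p index.search('dog')      # documents 1 and 2
p index.search('wild dog') # document 2 only
p index.search('animals')  # empty: no document contains this exact word
```

The last query shows the limitation discussed in this section: the index only knows exact words, so "animals" finds nothing, and even "cat" would miss document 3, which contains "Cats".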

Instead of returning matching documents in arbitrary order, search engines rank them by relevance. When prioritizing documents or web pages, a search engine relies on the following aspects:

- Relevance score of each item in the search index. The relevance score shows the degree to which the document matches the user’s query.

- Ranking algorithms. They help search systems calculate relevance scores for documents. The algorithms are complex and may involve machine learning techniques.
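As an illustration of how a ranking algorithm can turn matches into relevance scores, here is a minimal TF-IDF sketch in Ruby. TF-IDF is one classic scoring scheme chosen for this example; production search engines typically use refinements such as BM25 plus many other signals. The method names and sample documents are hypothetical.

```ruby
# Split text into lowercase words (simplified tokenization).
def tokenize(text)
  text.downcase.scan(/[a-z0-9]+/)
end

# docs: Hash of document id => text.
# Returns [[id, score], ...] for documents with a non-zero score, best first.
def rank(docs, query)
  tokenized = docs.transform_values { |text| tokenize(text) }
  n_docs = tokenized.size.to_f
  terms = tokenize(query)

  scored = tokenized.map do |id, words|
    score = terms.sum { |term|
      tf = words.empty? ? 0.0 : words.count(term).to_f / words.size # term frequency
      df = tokenized.count { |_, other| other.include?(term) }       # document frequency
      idf = df.zero? ? 0.0 : Math.log(n_docs / df)                    # rarer terms weigh more
      tf * idf
    }
    [id, score]
  end

  scored.reject { |_, score| score.zero? }.sort_by { |_, score| -score }
end

docs = {
  'a' => 'Vector databases store vector embeddings',
  'b' => 'Relational databases store rows and columns',
  'c' => 'Cooking recipes for dinner'
}
p rank(docs, 'vector databases') # document a ranks above b; c is not returned
```

Document a wins because it mentions the rare term "vector" twice, while "databases" appears in two documents and therefore carries less weight.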

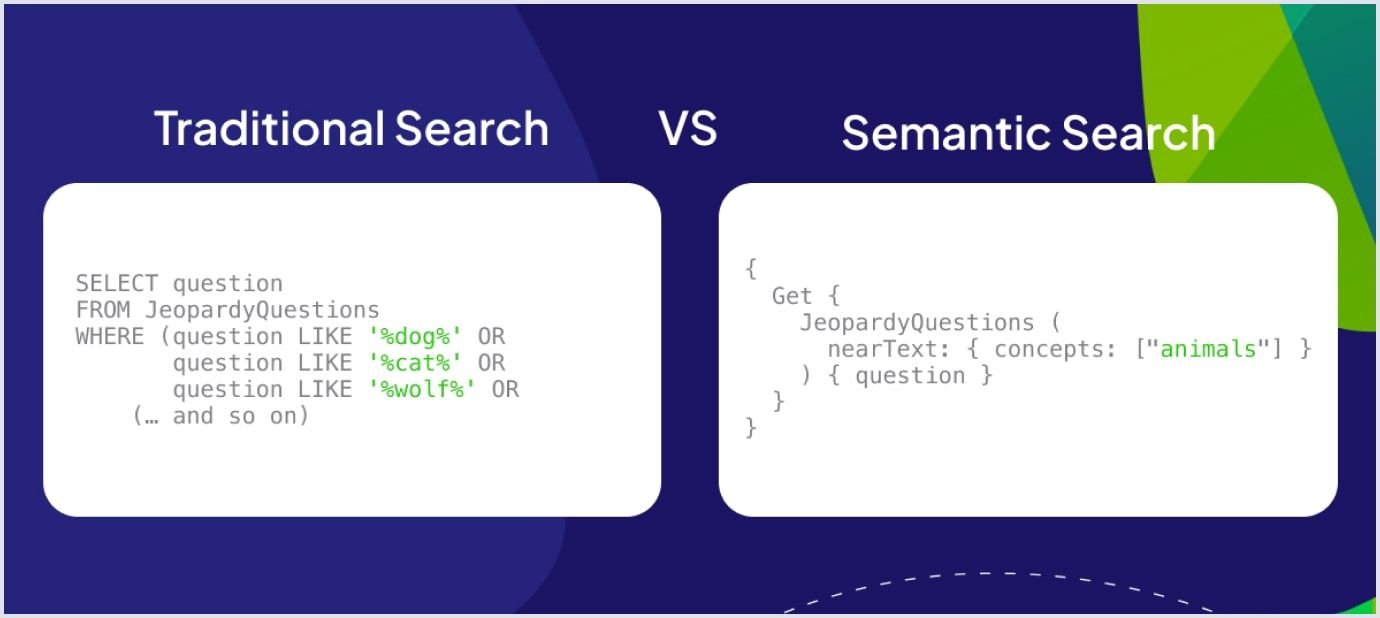

Keyword search is used in traditional relational databases that store data in columns. The downside of the keyword method is that you need long queries to cover several related terms. For example, you need to type “dog”, “cat”, “wolf”, and so on. In a semantic search, you could simply type “animals”. The comparison below illustrates this difference.

Source: Weaviate.io

Imagine how cumbersome such queries become when processing millions of data objects. That is where semantic search and vector databases come in.

Semantic search and vector databases: driving mechanism

What is semantic search? It is a search engine technology that interprets the meaning of words and phrases in queries. As a result, semantic search engines return results based on the meaning of a query rather than its exact characters. Semantic search is also called vector or similarity search.

A semantic search engine returns the most relevant results by recognizing the context and intent of a search. Depending on whether you are looking for information or trying to make a purchase, you will get different results. For example, if you type “restaurant”, you will get a list of restaurants near you. If you type “Bertazzoni discounts”, you will get a list of stores and websites with the best discount offers on these appliances.

The power of semantic search comes from vectors, or vector embeddings: arrays of numbers that represent the properties of data objects. The process works as follows:

- An embedding model (built into the vector database or connected to it) transforms a data object (text, video, or audio) into a sequence of numbers (a vector);

- The vector database indexes those vectors; each number in a vector corresponds to one dimension;

- Each vector is a point in the vector space, located at some distance from the other vectors;

- A vector database’s algorithms calculate distances between vectors and find similarity. The closer the vectors are, the more similar the objects are.
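The steps above can be sketched in a few lines of Ruby. The 3-dimensional vectors are made up for readability and stand in for the output of an embedding model, which usually produces hundreds or thousands of dimensions. This brute-force scan is only an illustration: vector databases use approximate nearest-neighbor indexes instead (Weaviate uses HNSW).

```ruby
# Cosine similarity: 1.0 means the vectors point in the same direction.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norms = Math.sqrt(a.sum { |x| x * x }) * Math.sqrt(b.sum { |x| x * x })
  norms.zero? ? 0.0 : dot / norms
end

# Returns the k stored objects whose vectors are most similar to the query.
def nearest(store, query_vector, k: 2)
  store.map { |name, vec| [name, cosine_similarity(query_vector, vec)] }
       .max_by(k) { |_, similarity| similarity }
end

# Toy "embeddings" (hypothetical values, not produced by a real model).
store = {
  'dog'  => [0.9, 0.8, 0.1],
  'wolf' => [0.8, 0.9, 0.2],
  'car'  => [0.1, 0.2, 0.9]
}
animal_query = [0.85, 0.85, 0.15] # pretend embedding of the query "animals"

p nearest(store, animal_query) # dog and wolf are returned; car is far away
```

Because "dog" and "wolf" lie close to the query vector, they are returned even though neither contains the word "animals", which is exactly what keyword search could not do.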

Check out the video below for a quick review of what vector databases are and how you can use them.

Vectors can have hundreds of dimensions, and vector databases can process millions of vectors. Vectorization has opened up many new opportunities: vectors help developers build efficient search, recommendation, and prediction solutions for a relevant, personalized user experience.

Differences between keyword search and semantic search

These approaches differ in how they retrieve information from databases or search engines. We summarized their differences in the table below for a convenient overview.

| Aspects | Keyword search | Semantic search |
| --- | --- | --- |
| Matching criteria | Exact words or phrases (keywords) in the user’s search query. It finds documents that match the query. | The meaning and intent behind the user’s query. It finds documents that match the meaning and intent even if they do not contain the query’s words. |
| Contextual understanding | Limited. It retrieves documents based on the presence of keywords without considering the broader context or relationships. | It understands the context in which the query is used. It considers synonyms, related contexts, and the user’s intent to find relevant documents. |
| Relevance and precision | Some returned documents may not correspond to the user’s query, so users may need to refine their query to get relevant results. | It delivers more precise and relevant documents based on the user’s intent, which ensures a better search experience. |
| Handling ambiguity | Ambiguous words are difficult because it relies on exact query matches. It may not distinguish between different meanings of the same word. | It handles ambiguity by considering context and can distinguish the meaning of a word or phrase based on that context. |
| Natural language processing (NLP) | Typically, it does not try to understand the natural language structure of queries, so it does not involve NLP techniques. | It often uses NLP and machine learning to interpret natural language queries, extract meaning, and generate more accurate, contextual results. |
| User experience | It is straightforward and familiar to users who know what query to enter and what results to expect. | It may require longer, more descriptive queries to express user intent accurately, but it returns results that better match that intent. |
| Interpretability | A user can understand why specific results appear, and a developer can tune the algorithm based on that understanding. | It is not always obvious why specific results appear. |
| Documentation and testing | It has been tested for years, and many developers know how to integrate it. There is ample documentation, sample applications, and pre-built components. | Because the approach has been widely adopted only recently, documentation and testing are still maturing. Developers are still looking for ways to optimize semantic search and improve its predictability. |

AI and semantic search

Artificial intelligence technologies are on the rise. Bloomberg predicts that the AI market will grow at a compound annual growth rate of 39.4% between 2022 and 2028.

Semantic search based on vector embeddings is used for generative AI systems. These are machine-learning models that generate content in response to text prompts. Such generated content can be text, images, audio, video, or code.

Semantic search is especially important for content-generating systems built on large language models (LLMs), such as ChatGPT, LLaMA, LaMDA, and Bard. They are capable of giving meaningful answers to users’ text prompts.

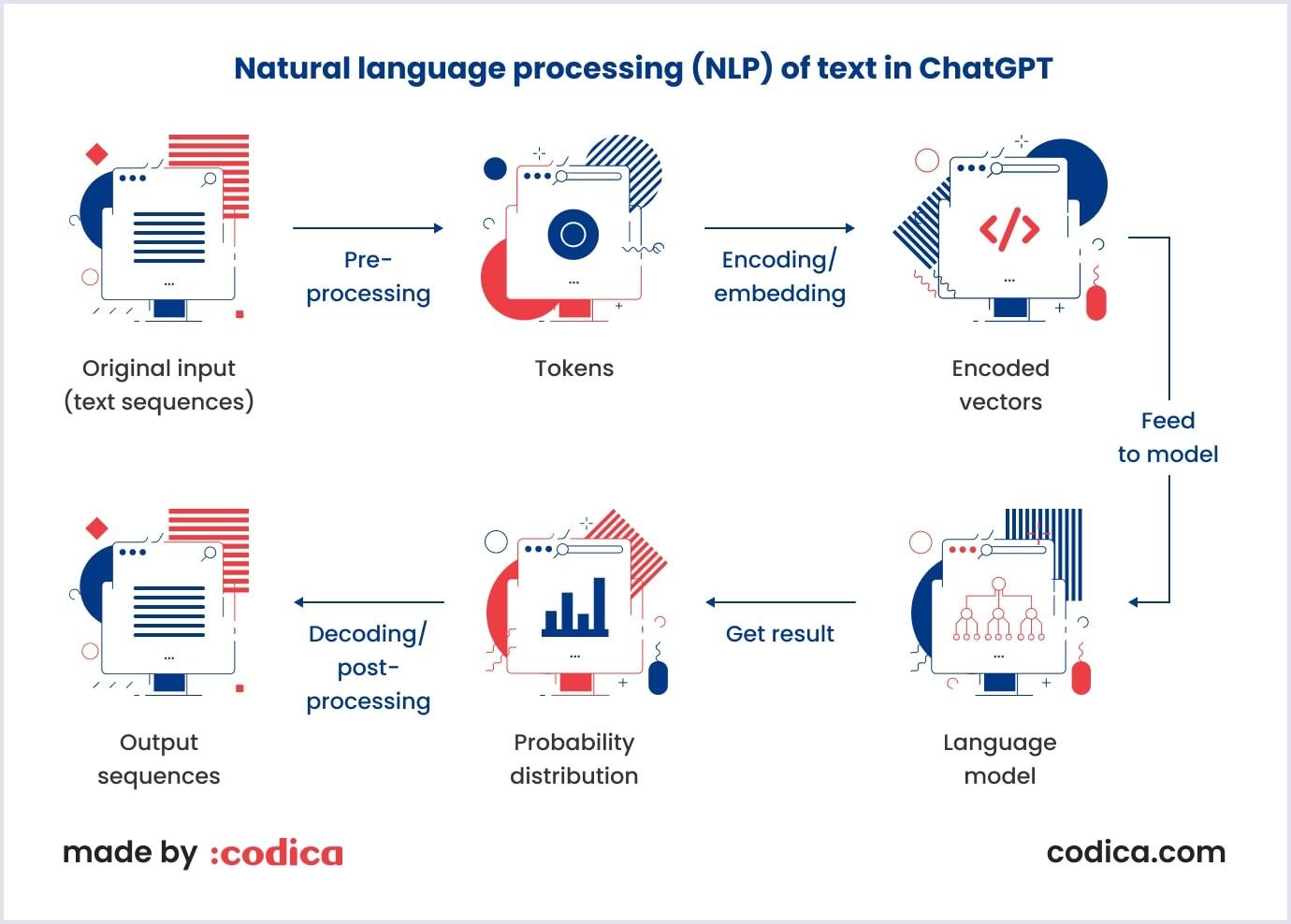

When semantic search is used with an LLM, the query is first transformed into a vector that represents its meaning. This query vector is then compared to the document vectors in the database to find semantically close content. The image below shows how ChatGPT works using natural language processing.

Despite the opportunities they open up, LLMs have two significant limitations:

Large language models have a word limit

LLMs have a limited context window: they can process only a few thousand words at a time. To work with larger texts, you can split them into manageable chunks, store a vector embedding for each chunk, and pass the LLM only the most relevant chunks. The question is: where do you store the embeddings? The answer is a vector database.
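Here is a minimal Ruby sketch of the chunking step: a long text is split into word-limited, slightly overlapping chunks so each one can be embedded and stored separately. The method name and limits are hypothetical; real systems usually count model tokens rather than words and choose limits to fit the model’s context window.

```ruby
# Splits text into chunks of at most max_words words. Consecutive chunks share
# `overlap` words so that a sentence cut at a boundary keeps some context.
def chunk_words(text, max_words: 200, overlap: 20)
  raise ArgumentError, 'overlap must be smaller than max_words' if overlap >= max_words

  words = text.split
  step = max_words - overlap
  chunks = []
  (0...words.size).step(step) do |start|
    chunks << words[start, max_words].join(' ')
    break if start + max_words >= words.size # this chunk already reaches the end
  end
  chunks
end

text = (1..10).map { |i| "w#{i}" }.join(' ')
p chunk_words(text, max_words: 4, overlap: 1)
# => ["w1 w2 w3 w4", "w4 w5 w6 w7", "w7 w8 w9 w10"]
```

Each chunk would then be vectorized and stored in the vector database, and at query time only the closest chunks are placed in the LLM’s prompt.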

Large language models have a time limit

For example, ChatGPT was trained on data from before 2022. What happens if you ask it about recent events? Either the LLM replies that it cannot do what you want or, worse, it produces a hallucination: a confident but false answer.

To solve this problem, developers connect LLMs to the internet, as with ChatGPT. They also use web scraping to extract data from websites and other online resources. Storing and searching such large datasets requires a vector database.

To sum up, LLM applications use semantic search to process queries and return results. To keep them useful in the long run, you need a vector database that can store large datasets and hold up-to-date information for the LLM to work with.
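Once fresh documents are stored in a vector database, a common pattern (retrieval-augmented generation) is to put the retrieved passages into the prompt so the LLM answers from current data. The minimal Ruby sketch below shows only the prompt-assembly step; retrieval is assumed to have happened already, and the prompt wording, the Passage struct, and the sample passages are illustrative assumptions.

```ruby
# A retrieved passage: where it came from, when it was published, and its text.
Passage = Struct.new(:source, :date, :text)

# Builds a prompt that asks the LLM to answer only from the retrieved passages,
# which reduces the chance of outdated or hallucinated answers.
def build_grounded_prompt(question, passages, max_passages: 3)
  context = passages.first(max_passages).map.with_index(1) do |passage, i|
    "[#{i}] (#{passage.source}, #{passage.date}) #{passage.text}"
  end

  <<~PROMPT
    Answer the question using only the context below. If the context does not
    contain the answer, say that you do not know.

    Context:
    #{context.join("\n")}

    Question: #{question}
  PROMPT
end

# Hypothetical passages, as if returned by a vector search.
passages = [
  Passage.new('news-article-1', '2023-04', 'Weaviate raised $50 million in Series B funding in April 2023.'),
  Passage.new('news-article-2', '2022-12', 'Weaviate open-source downloads crossed two million in 2022.')
]
puts build_grounded_prompt('How much did Weaviate raise in its Series B round?', passages)
```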

Business value and benefits of integrating AI semantic search

In short, semantic search is vital to driving conversions and revenue. Understanding prospects’ needs and providing them with a better search experience will build trust in your brand. As customers see how simple and efficient their experience on your website or app is, they will likely return.

Semantic search shows customers that you care about their needs. While some users come to browse and explore search results, many want to find specific items or information. An efficient search saves them time that they can spend reviewing your products and taking action. As a result, you can expect a higher average order value (AOV) and revenue per visitor (RPV).

Moreover, semantic search gives your customers personalized experiences. Suppose a prospect searches for “tank top,” “swimwear,” or “running shoes” in your ecommerce marketplace. An intelligent ecommerce search engine can return results based on the user’s gender, search intent, and history.

Among e-commerce stores leveraging semantic search are Amazon, Walmart, Zappos, and eBay.


How to integrate Weaviate into your solution

There are two ways to integrate the Weaviate database into your solution: through an official client library or directly through its API. Weaviate’s official clients are currently available for Python, TypeScript, Java, and Go. There are also community-supported clients for .NET/C#, PHP, and Ruby.

Weaviate used to provide a JavaScript client, but it is no longer supported. Weaviate recommends switching to the TypeScript client instead.

Our company specializes in JavaScript/TypeScript and Ruby, so we chose to share our expertise in integrating Weaviate into solutions built with these technologies. First, we discuss TypeScript, and then we move on to the Ruby integration.

Weaviate integration guide for TypeScript

In this section, we cover the prerequisites for Weaviate integration with TypeScript as presented in Weaviate documentation. They include installation and authentication. Let’s see how they work in Weaviate.

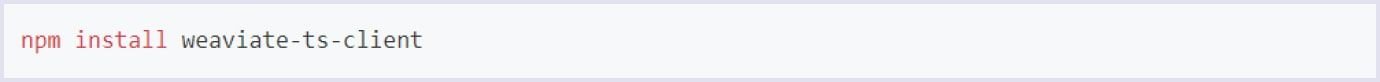

Installation

Step 1

Install the Weaviate TypeScript client as an npm package:

Step 2

Once the client is installed, use it in your TypeScript code as follows:

Authentication

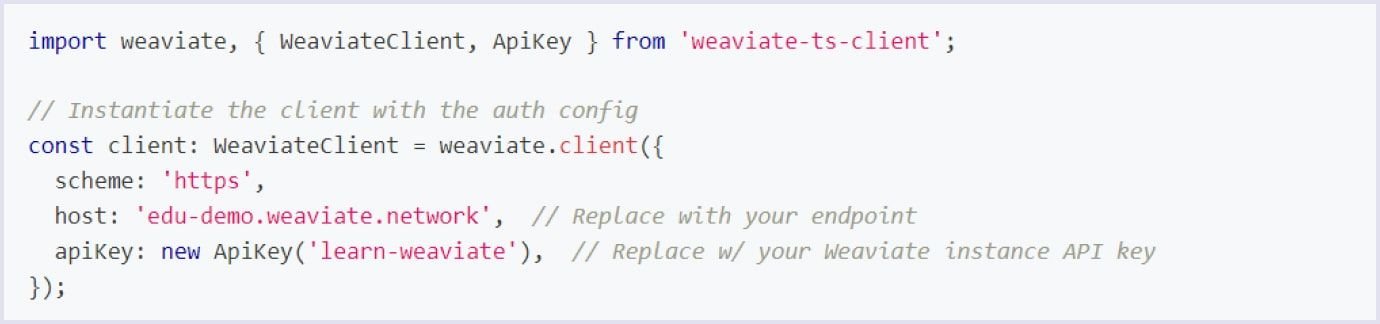

Weaviate’s documentation covers authentication in detail. Here, we cover the main authentication steps for the Weaviate database.

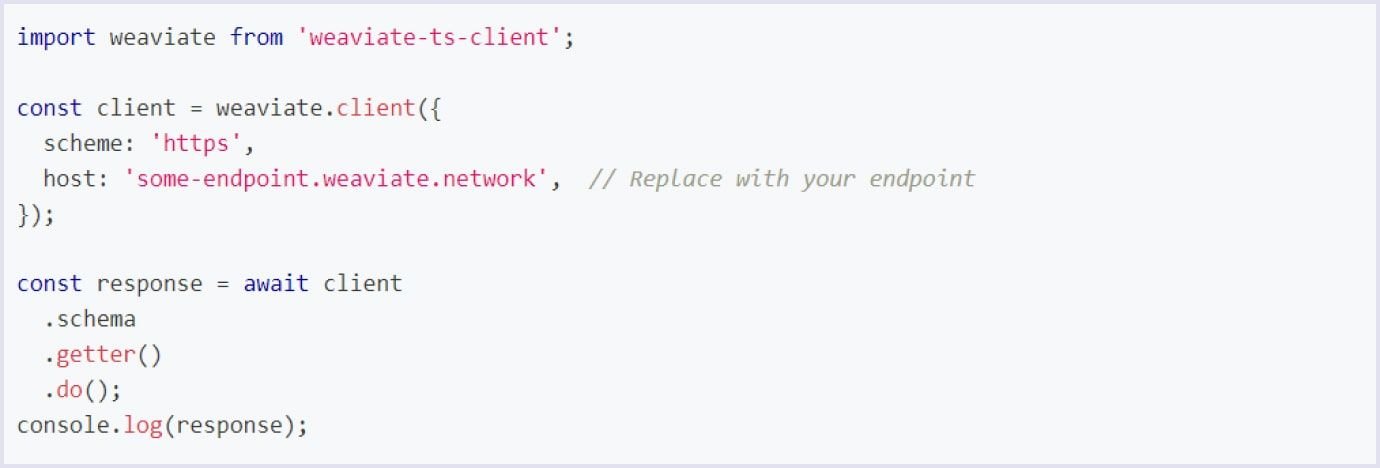

Weaviate Cloud Services (WCS) authentication

You have two options for authenticating with a WCS instance:

- With an API key, which is the recommended method;

- With the account owner’s WCS username and password.

Free WCS instances come with a full-admin (read and write) API key, while paid instances provide both a read-only and a full-admin API key.

Authenticating to WCS with an API key requires each request to include the key in its header. The simplest approach is to pass the API key to the Weaviate client when you instantiate it, as in the code below:

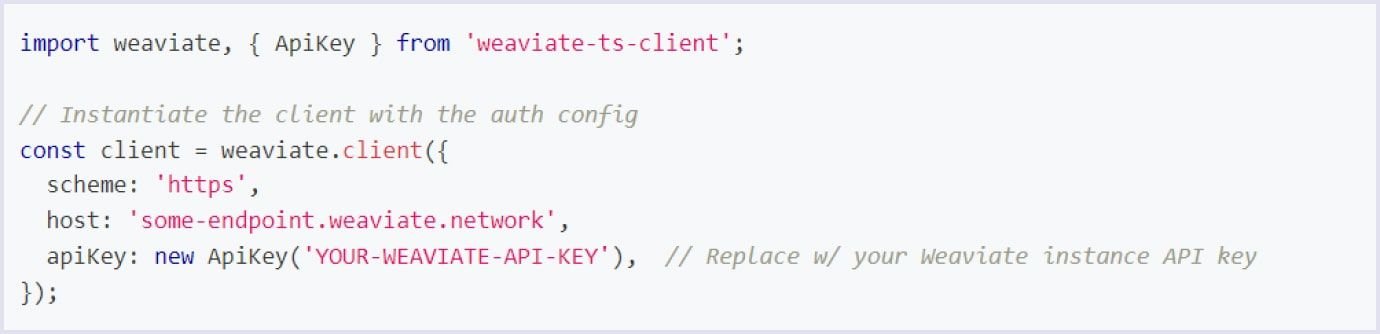
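To show what “the key in each request’s header” means at the HTTP level, here is a minimal Ruby sketch using only the standard library. Weaviate expects the API key as a Bearer token in the Authorization header, and /v1/meta is its REST endpoint for instance metadata. The cluster URL and key below are placeholders, and the method name is hypothetical; in practice, the client library builds this header for you.

```ruby
require 'net/http'
require 'uri'

# Builds a GET request to a Weaviate REST endpoint with the API key in the
# Authorization header (Bearer scheme). Nothing is sent over the network here.
def authorized_request(cluster_url, api_key, path: '/v1/meta')
  uri = URI.join(cluster_url, path)
  request = Net::HTTP::Get.new(uri)
  request['Authorization'] = "Bearer #{api_key}"
  [uri, request]
end

# Hypothetical cluster URL and placeholder key; use your own values from WCS.
uri, request = authorized_request('https://my-cluster.weaviate.network', 'YOUR-WEAVIATE-API-KEY')

# To actually send the request (requires a reachable instance):
# response = Net::HTTP.start(uri.host, uri.port, use_ssl: true) { |http| http.request(request) }
# puts response.body
puts "#{request.method} #{uri}"
```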

If you authenticate with your WCS username and password, each request’s header must include an OpenID Connect (OIDC) token. The easiest way to handle this is to pass the credentials to the Weaviate client at instantiation.

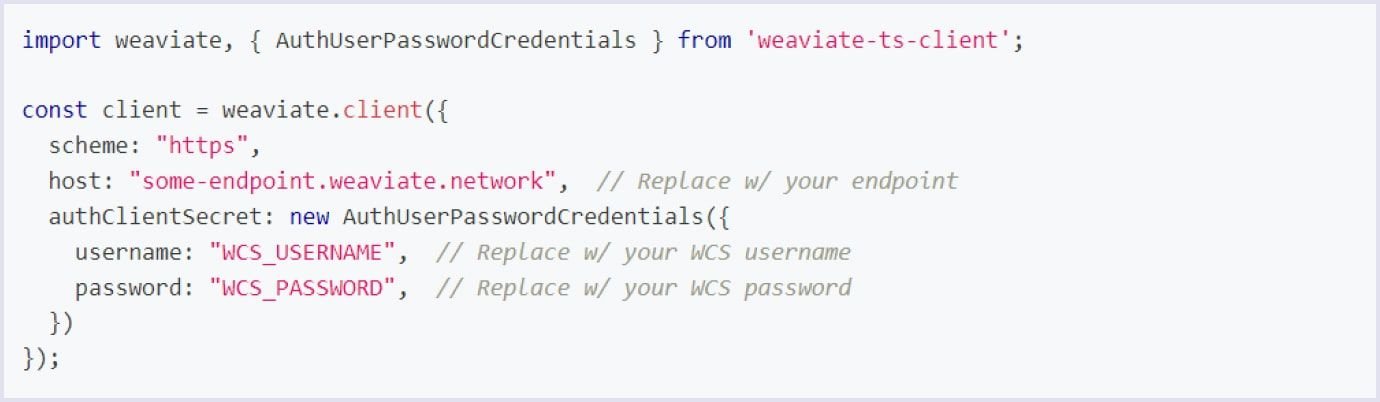

API key authentication in Weaviate

In this case, you need to instantiate the client. The code below presents the client instantiation for API key authentication in Weaviate:

OIDC authentication in Weaviate

OIDC authentication in Weaviate uses a flow-specific configuration, which the Weaviate client then uses to authenticate.

The configuration includes the secrets needed to obtain an access token and a refresh token. Because the access token has a limited lifespan, the refresh token is used to obtain new access tokens when the current one expires.
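The access/refresh cycle can be illustrated with a small Ruby class. This is a minimal sketch of the logic only, assuming a hypothetical `refresher` callable that stands in for the real call to the identity provider’s token endpoint and returns a new access token with its lifetime in seconds. In practice, the Weaviate client uses its OIDC configuration to do this for you.

```ruby
# Caches an access token and refreshes it shortly before it expires.
class TokenManager
  Token = Struct.new(:value, :expires_at)

  # refresher: hypothetical callable, ->(refresh_token) { [access_token, expires_in_seconds] }
  # clock: injectable time source (handy for testing)
  # skew: refresh this many seconds early so a nearly expired token is never used
  def initialize(refresh_token, refresher, clock: -> { Time.now }, skew: 30)
    @refresh_token = refresh_token
    @refresher = refresher
    @clock = clock
    @skew = skew
    @token = nil
  end

  # Returns a valid access token, refreshing it if needed.
  def access_token
    if @token.nil? || @clock.call >= @token.expires_at - @skew
      value, expires_in = @refresher.call(@refresh_token)
      @token = Token.new(value, @clock.call + expires_in)
    end
    @token.value
  end
end

# Usage with a fake refresher that issues 60-second tokens.
calls = 0
fake_refresher = ->(_refresh_token) { calls += 1; ["access-token-#{calls}", 60] }
manager = TokenManager.new('refresh-token', fake_refresher)
puts manager.access_token # first call fetches a new access token
puts manager.access_token # second call reuses it while it is still valid
```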

To learn more about installing and configuring Weaviate for TypeScript, check out the Weaviate guide.

Weaviate integration guide for Ruby

Now, we will walk you through the process of integrating Weaviate for a Ruby-based solution. Let’s get right in.

Step 1. Set up and configure the WCS instance

Create a database instance on WCS. Set WEAVIATE_URL and WEAVIATE_API_KEY to the values shown in the Weaviate console.

Step 2. Set the OpenAI API key

Obtain an API key from OpenAI and set it as the OPENAI_API_KEY environment variable.
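Before moving on, it can help to fail fast if any of these values is missing. The following is a minimal Ruby sketch, assuming the three values are read from environment variables (the next step reads them from Rails credentials instead). The method name is hypothetical; the returned keys mirror the keyword arguments of the Weaviate::Client.new call in Step 3.

```ruby
# Names taken from Steps 1 and 2.
REQUIRED_ENV = %w[WEAVIATE_URL WEAVIATE_API_KEY OPENAI_API_KEY].freeze

# Raises a clear error listing every missing variable, then returns a config
# Hash whose keys match the client options used in Step 3.
def load_weaviate_config(env = ENV)
  missing = REQUIRED_ENV.select { |name| env[name].to_s.strip.empty? }
  raise KeyError, "Missing environment variables: #{missing.join(', ')}" unless missing.empty?

  {
    url: env.fetch('WEAVIATE_URL'),
    api_key: env.fetch('WEAVIATE_API_KEY'),
    model_service: :openai,
    model_service_api_key: env.fetch('OPENAI_API_KEY')
  }
end
```

For example, you could call load_weaviate_config at application boot so that a misconfigured deployment stops with one readable error instead of failing on the first Weaviate request.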

Step 3. Add the Weaviate Ruby API client

Use the following snippet to add the Weaviate Ruby API client and configure it:

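```ruby
# Gemfile
# Ruby wrapper for the Weaviate vector search database API
gem 'weaviate-ruby'
```

```ruby
require 'weaviate'

# Memoized client. The WCS URL, WCS API key, and OpenAI key from Steps 1 and 2
# are read here from Rails encrypted credentials.
def weaviate_client
  @weaviate_client ||= Weaviate::Client.new(
    url: Rails.application.credentials.dig(:weaviate, :cluster_url),
    api_key: Rails.application.credentials.dig(:weaviate, :api_key),
    model_service: :openai, # OpenAI generates the vectors
    model_service_api_key: Rails.application.credentials.openai_access_token
  )
end
```

Step 4. Create the schema with data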

Create the schema that will hold the data:

```ruby
weaviate_client.schema.create(
  class_name: 'JobTitles',                   # Name of the collection
  description: 'A collection of job titles', # Description of the collection
  vectorizer: 'text2vec-openai',             # OpenAI will be used to create vectors
  module_config: {
    'qna-openai': {                          # Weaviate's OpenAI Q&A module
      model: 'text-davinci-003',             # OpenAI's LLM to be used (since retired by OpenAI; pick a supported model)
      maxTokens: 3500,                       # Maximum number of tokens to generate in the completion
      temperature: 0.0,                      # How deterministic the output will be
      topP: 1,                               # Nucleus sampling
      frequencyPenalty: 0.0,
      presencePenalty: 0.0
    }
  },
  properties: [
    { dataType: ['text'], description: 'Job Title Name', name: 'name' },
    { dataType: ['text'], description: 'Job Title Language Code', name: 'language_code' },
    { dataType: ['text'], description: 'Job Title Description', name: 'description' },
    { dataType: ['text[]'], description: 'Job Title skills', name: 'skills' },
    { dataType: ['int'], description: 'Code of Job', name: 'isco' } # ISCO occupation code
  ]
)
```

Step 5. Index data from PostgreSQL to Weaviate

Create a rake task to batch add the data to Weaviate:

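```ruby
require 'json'
require 'weaviate'

# OCCUPATIONS_LANGUAGES (language codes to import) and CHUNK_SIZE (batch size)
# are constants defined elsewhere in the application; weaviate_client is the
# helper from Step 3.
task export_job_titles: :environment do
  json_data = File.read('public/job_data.json')
  objects = JSON.parse(json_data)

  # Keep only job titles in the supported languages
  selected_objects = objects.select do |object|
    OCCUPATIONS_LANGUAGES.include?(object.dig('properties', 'language_code'))
  end

  # Send the objects to Weaviate in batches of CHUNK_SIZE
  selected_objects.each_slice(CHUNK_SIZE) do |chunk|
    weaviate_client.objects.batch_create(objects: chunk)
  end
end
```

Run the rake task (rake export_job_titles) and confirm that the records were imported successfully: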

```ruby
weaviate_client.query.aggs(
  class_name: 'JobTitles',
  fields: 'meta { count }'
)
# => [{ "meta" => { "count" => 100 }}]
```

Step 6. Test the Weaviate search with the ask: parameter

Ask a question with the ask: parameter, as in the following snippet:

```ruby
# message holds the user's question, for example, a job title typed by a recruiter
weaviate_client.query.get(
  class_name: 'JobTitles',
  limit: '1',
  fields: 'name isco',
  ask: "{ question: \"#{message}\" }"
)
```

To sum up, we integrated Weaviate into a Ruby solution and got answers to our questions. When we entered a query, Weaviate returned results related to it even when they did not contain the query’s exact words. So, we found what we were looking for and even more than we expected.
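One caveat about the Step 6 snippet: it interpolates message directly into the GraphQL ask argument, so a question containing a double quote (for example, What does "PM" mean?) would produce an invalid query. A minimal Ruby sketch of a safer helper is shown below. It assumes the ask string is passed into the GraphQL query verbatim, as the snippet suggests, and relies on JSON string escaping being compatible with GraphQL string literals. The helper name is hypothetical.

```ruby
require 'json'

# Quotes and escapes the question so it is always a valid GraphQL string literal.
def ask_argument(question)
  "{ question: #{question.to_json} }"
end

puts ask_argument('What does "PM" mean?')
# => { question: "What does \"PM\" mean?" }
```

You would then pass ask: ask_argument(message) instead of building the string by hand.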

Pinecone vs. Weaviate

These are two of the most popular vector databases for generative AI, recommendation, and search solutions. Their features are similar, but they differ in the ways presented in the table below.

| Parameters | Pinecone | Weaviate |
| --- | --- | --- |
| Definition | Vector database management system (DBMS) | Vector database management system (DBMS) |
| Initial release | 2019 | 2019 |
| Built with a programming language | C/C++, Python | Go |
| Primary features | Semantic search | Keyword and semantic search |
| Availability | Paid cloud-based | Open-source and cloud-based |
| Official clients | Python, Node.js | Python, Go, TypeScript/JavaScript, Java |
| APIs and other access methods | RESTful HTTP API | GraphQL query language, RESTful HTTP/JSON API |
| Integrations | Amazon SageMaker, OpenAI, Hugging Face Inference Endpoints, Elasticsearch, LangChain, and more | PaLM API, Auto-GPT, LangChain, and more |

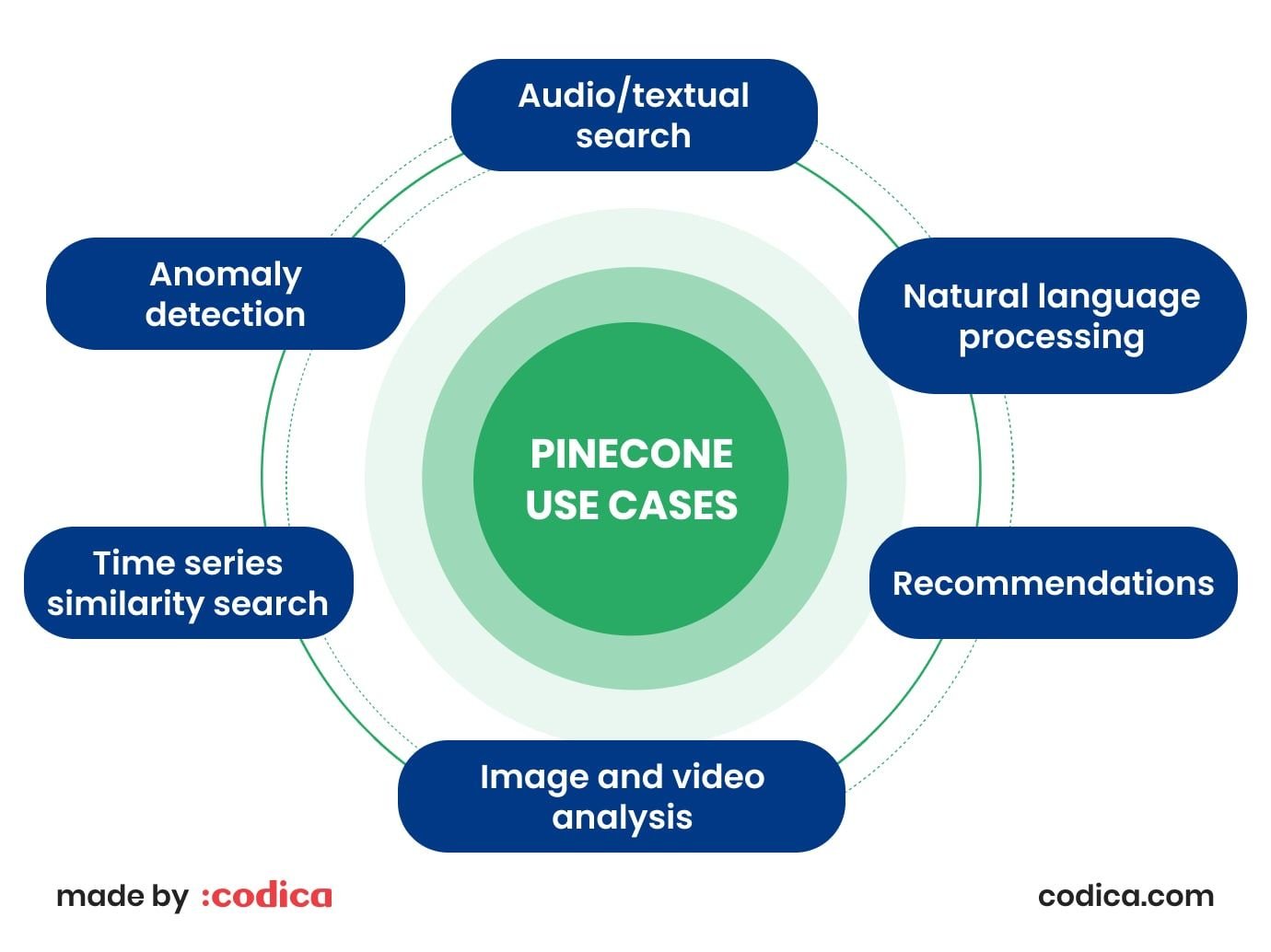

Pinecone and Weaviate also differ in their use cases for enriching solutions with data. Let’s look at these use cases in more detail.

Pinecone use cases

Audio/textual search. Pinecone provides deployment-ready search and similarity functionality for high-dimensional text and audio data.

Natural language processing. Pinecone is used together with tools such as AutoGPT to build context-aware solutions for text summarization, sentiment analysis, semantic search, and document classification.

Recommendations. The database provides capabilities for creating personalized recommendations and similar item recommendations.

Image and video analysis. Pinecone enables solutions to retrieve images and video faster. This capability is used in real-world surveillance and image recognition.

Time series similarity search. The database helps store and search time-series vectorized data. For example, matching vectorized time series data helps find the most similar stock trends.

Anomaly detection. Pinecone helps find outlying or anomalous data points, so it can be used to detect suspicious transactions and cybersecurity breaches.
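The anomaly detection use case above can be illustrated with a minimal distance-based sketch in Ruby: a point is flagged when even its nearest neighbor is farther away than a threshold. The brute-force Euclidean search, the threshold, and the toy transaction vectors are assumptions for illustration; a vector database would answer the nearest-neighbor lookups with an index instead.

```ruby
# Euclidean distance between two vectors of equal length.
def euclidean(a, b)
  Math.sqrt(a.zip(b).sum { |x, y| (x - y)**2 })
end

# points: Hash of id => vector. Returns ids whose nearest neighbor is farther
# than threshold. A lone point has no neighbor and is not flagged.
def anomalies(points, threshold:)
  points.select { |id, vec|
    nearest = points.reject { |other_id, _| other_id == id }
                    .map { |_, other| euclidean(vec, other) }
                    .min
    nearest && nearest > threshold
  }.keys
end

# Toy transaction vectors, e.g. [normalized amount, normalized hour of day].
transactions = {
  't1' => [0.10, 0.20],
  't2' => [0.12, 0.22],
  't3' => [0.11, 0.18],
  't4' => [0.95, 0.90] # unusually large amount at an unusual hour
}
p anomalies(transactions, threshold: 0.3) # => ["t4"]
```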

Weaviate use cases

Similarity search. The tool helps find similarities between modalities, such as texts and images, and their combinations. Also, a similarity search helps build recommendation systems in machine learning applications.

For example, Weaviate’s example projects for similarity search include a movie recommender system, video caption search, and text-to-image search.

LLMs and search. The Weaviate vector database provides search capabilities for large language models, including GPT-3 and GPT-4 from OpenAI, LLaMA from Meta, and PaLM 2 from Google. Its semantic search features help LLMs mitigate their common limitations, such as hallucinations, where a model gives confident but unjustified responses.

Weaviate also offers open-source projects for retrieval-augmented generation, generative search, and generative feedback loops.

Classification. Weaviate’s vector search helps perform real-time automatic classification of unseen concepts. Weaviate’s current example projects include toxic comment classification and audio genre classification.

Spell checking. Weaviate can check the spelling of raw text. Its spellcheck module, based on the Python spellchecker library, analyzes the text, suggests corrections, and can optionally autocorrect it.

Other Weaviate use cases. They include e-commerce search, recommendation engines, and automated data harmonization. The database also helps with anomaly detection and cybersecurity threat analysis, which makes Weaviate useful for DevOps services as well.

For example, the video below presents how to create a recommendation system with AI and semantic search using Weaviate.

Other popular vector databases

If you need to handle different data types, you might also be interested in alternatives to Weaviate. Below, we have collected a few of the most popular options.

Chroma

Chroma is an open-source vector database that gives developers and organizations of any size the resources to build applications powered by large language models (LLMs). You can store, search, and retrieve high-dimensional vectors with this highly scalable solution.

Chroma owes much of its popularity to its flexibility. You can deploy it on-premises or in the cloud, and it supports various data types and formats. Developers use it for different applications, for example, in cross-platform app development. Still, audio data is what Chroma processes best, so you can use it for audio-based search engines, music recommendations, and similar solutions.

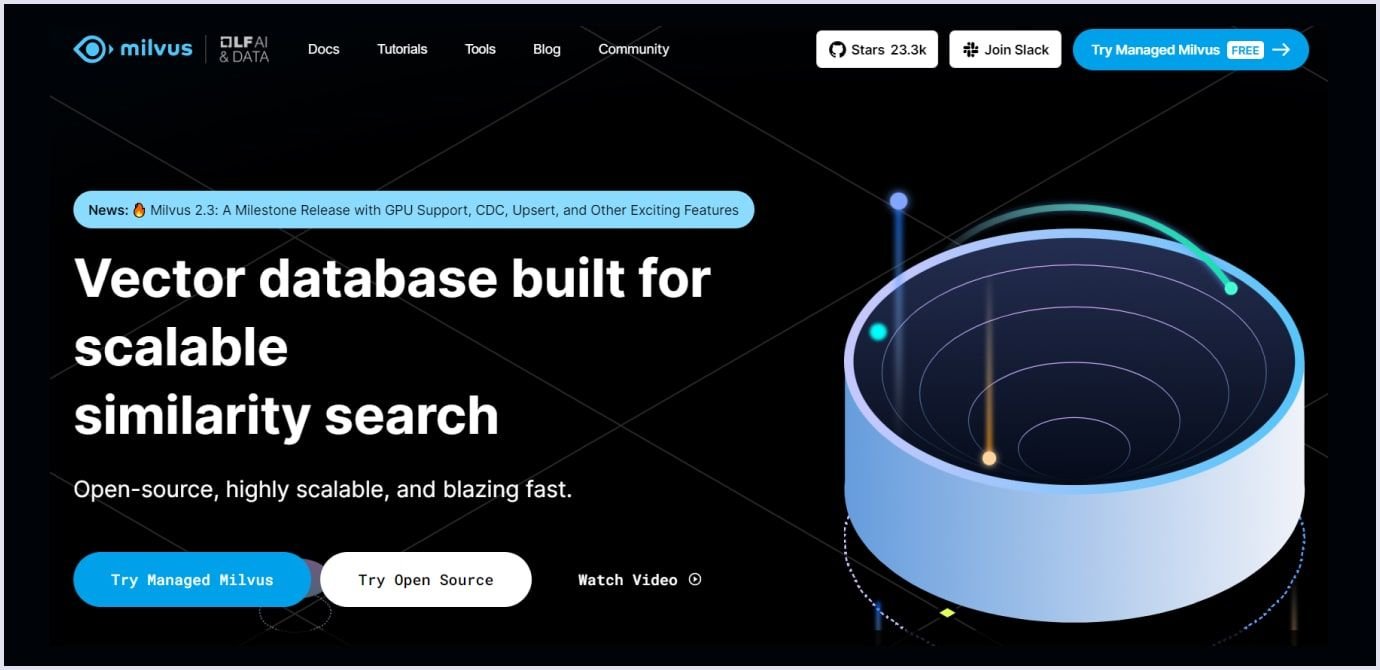

Milvus

This open-source vector database is popular in data science and machine learning. Milvus provides robust support for vector search and querying, and its algorithms speed up search and retrieval even on large datasets.

Milvus integrates easily with popular frameworks such as PyTorch and TensorFlow, so developers can add it to existing machine learning workflows.

The database is used in e-commerce solutions for recommendation systems. Milvus is also used for object recognition, similarity search, and content-based image retrieval. In addition, you can use it in natural language processing for document and text prompt processing.

Faiss (Facebook AI Similarity Search)

Faiss is a library from Meta for similarity search among multimedia documents. It also helps index and search large datasets of high-dimensional vectors.

The library optimizes query time and memory consumption, which makes vector storage and retrieval efficient. It performs well even with vectors of hundreds of dimensions.

One of the key applications of Faiss is image recognition: you can use it to build large-scale search engines that index and search a wide range of images. Another use case is semantic search systems, which retrieve similar documents or text sections from large document collections.

How Codica integrates AI search and Weaviate in HR tech project - case study

Recruitment apps are among the many solutions that benefit from integration with a vector database. Today, recruitment apps process large amounts of data related to job requirements, candidates’ profiles, and human resources operations. Thanks to semantic search, HR solutions with vector database integrations process this data quickly and accurately.

At Codica, we harnessed vector technology to provide recruiters with the best user experience and results. Let’s see how we implemented Weaviate vector DB to help recruiters create jobs and connect with candidates.

The online recruitment platform we worked on has been on the market for three years. We built it as a progressive web application (PWA), a technology that delivers convenient and functional solutions. The PWA helps companies hire skilled professionals from all over the world.

Over time, the company decided to rebrand the platform and enhance its functionality. Given the rise of AI search technologies and the capabilities they provide, we helped rework the platform to make it more efficient. Now, the platform offers versatile functionality for automatic vacancy creation and posting.

Thanks to the recruitment app, HR specialists can post vacancies on many job platforms with just one click. When recruiters create a job posting, the specialty entered by an HR specialist must have a standardized name that can be accepted and published on job platforms.

That is why we introduced semantic search powered by ChatGPT and Weaviate. We use ChatGPT via an API to vectorize search queries: when a recruiter enters a job search query, it is vectorized and then matched against the standardized job positions stored in Weaviate.

Thanks to the Weaviate search, recruiters can enter the profession as they know it. The platform will return search results matching the user’s intent. So, if a recruiter enters “Project Manager,” “IT Project Manager,” “PM,” or “Product Manager,” search results will return Project Manager as a matching term. Or if a recruiter wants to indicate a React Developer in the job description, the app will suggest Frontend Developer as a standard for this profession.

The recruitment platform also offers many automated functions through a chat assistant that simplifies the recruiter’s work on job descriptions. Guiding the recruiter through a multi-step form, the assistant asks them to describe the following aspects:

- Profession category;

- Experience level;

- The main activities of the position;

- Must-have requirements for candidates;

- Benefits;

- Working hours per week;

- Salary range and more.

Based on the answers, the system generates a job description. We use ChatGPT to suggest content for each aspect, so recruiters spend less time and effort creating job descriptions. Each field offers two options: autocomplete or custom text typed by the recruiter.

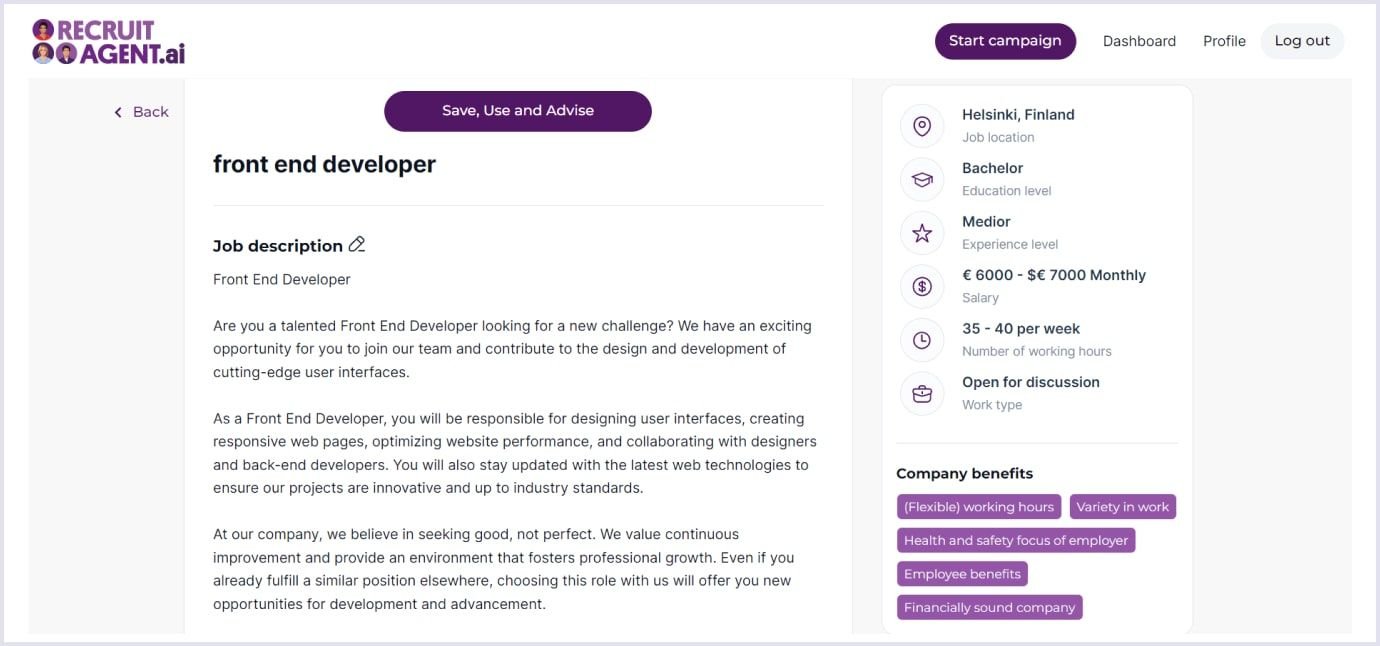

The image below shows what the vacancy looks like after the form completion.

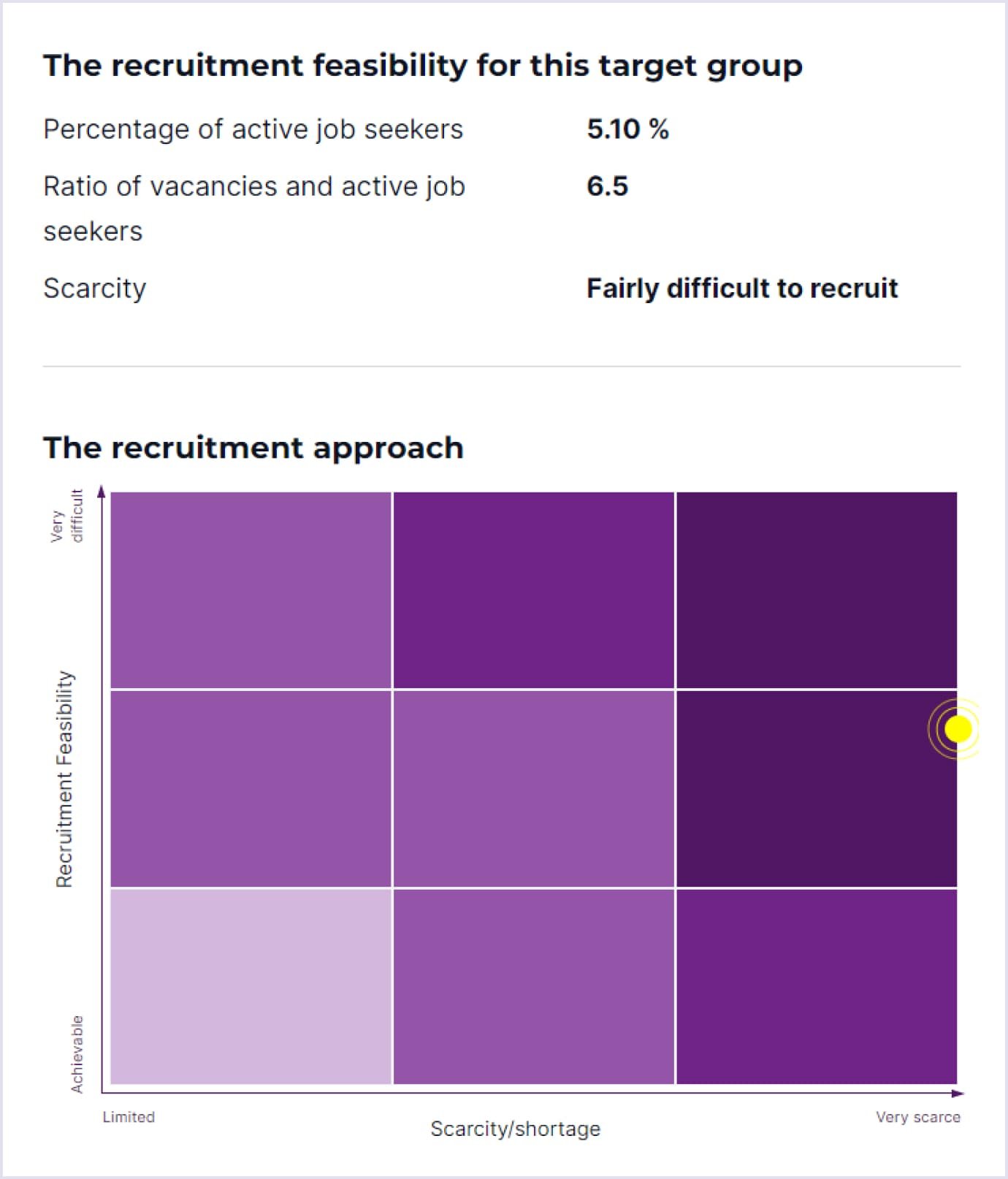

Moreover, the recruitment system provides HR specialists with a convenient analytics dashboard. It shows statistics on recruitment feasibility and candidate scarcity: the darker the rectangle, the harder it is to find a candidate for the job.

Once the job is posted on the job portals, candidates can contact the recruiter by clicking the relevant vacancy link. The recruiter will get a message with a candidate's name, email, and necessary files. So, a recruiter will be able to contact and message the candidate.

These are the basic features powered by ChatGPT and Weaviate. The platform continues to scale and gain new features that make recruiters’ work smooth and easy.

Check out our portfolio for more projects we have delivered over our eight years of experience.


On a closing note

Weaviate and other vector databases enrich solutions with extended capabilities thanks to semantic search. By taking the user’s intent into account, semantic search retrieves relevant results that match the user’s interests. Even with typos or imprecise queries, a system improved with semantic search can return results that match the user’s needs.

Based on semantic search and vector storage, Weaviate helps automate similarity search, recommendations, anomaly detection, cybersecurity threat analysis, and more. Integrating Weaviate into your solution can improve the user experience and lead to higher conversions.

If you want to use Weaviate in your project, contact us. Our experts are eager to help you with the vector database integration for your solution’s better performance.